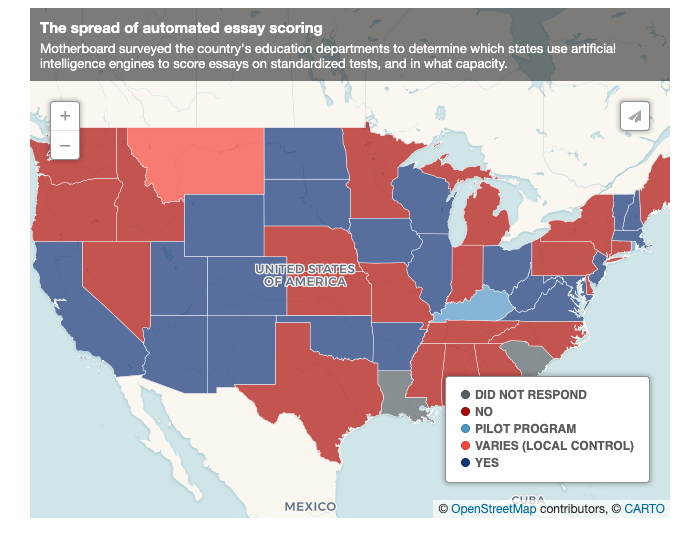

According to a survey, AI essay scoring systems based on natural language processing have been officially adopted for scoring official exams in at least 21 US states.

Nor is this only happening abroad: in China, the "machine assessor" has already entered the examination room.

As early as 2016, the China Education Testing Center and the University of Science and Technology established a joint laboratory to research the use of artificial intelligence in marking, question setting, and exam evaluation and analysis. In 2017, Hubei Fuyang officially introduced a machine scoring system as a scoring aid for the senior high school entrance examination.

These machine assessors draw on a huge amount of reference data. Even when they mark countless papers in a day, fatigue and bad moods supposedly never affect their efficiency or fairness.

But is this really the case? A recent report from VICE showed that these AI graders are not actually as fair as everyone imagined.

▲ Image from: VICE

Inevitable algorithmic bias

AI assessment is not as fair as everyone imagined. The first problem is algorithmic bias.

The education industry has long tried to eliminate subconscious bias against students from different language backgrounds, but the problem is quite serious in AI marking systems.

The E-rater machine scoring system from ETS (Educational Testing Service, a US non-profit testing organization) currently provides reference scores for the GRE, TOEFL and other exams. ETS is also one of the very few machine scoring providers that publish research on bias.

David Williamson, Vice President of New Product Development at ETS, said:

In fact, algorithmic bias in scoring systems is a universal problem. However, most providers are not as open with the public about it as we are.

Over many years of research, ETS found that its machine scoring system "prefers" students from mainland China, giving them higher scores overall than human raters do. Meanwhile, groups such as African Americans, Arabic-speaking students, and Spanish-speaking students are more likely to be penalized by the machine and receive lower scores.

▲Image from: VICE

ETS therefore conducted an in-depth study of the algorithm in 2018 and eventually found the reason.

Take the GRE (the US graduate school admissions exam): essays by students from mainland China tend to be longer and use a lot of complicated vocabulary, which leads the machine to judge them as above average and award more points. This happens even though, in the eyes of human raters, these complex sentences have little to do with the essay topic and are obviously memorized, pre-written passages.

In contrast, the language style of African-American and Arabic-speaking students tends to be more simple and straightforward, making it difficult to obtain higher scores in the machine scoring system.
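To make the mechanism concrete, here is a minimal toy sketch in Python. It is not E-rater or any real scoring engine: the function `surface_score`, its weights, and the sample essays are all hypothetical, chosen only to show how a scorer that looks at surface features (word count and word length) rewards long, ornate text regardless of whether it says anything.

```python
import re

def surface_score(essay: str) -> float:
    """Hypothetical toy scorer on a 0-6 scale.

    It looks only at surface features (word count and average word
    length) and never checks relevance or meaning.
    """
    words = re.findall(r"[A-Za-z']+", essay)
    if not words:
        return 0.0
    # More words earn more credit, capped at 50 words (assumed threshold).
    length_part = min(len(words) / 50, 1.0)
    # Longer words earn more credit, capped at 8 letters (assumed threshold).
    avg_word_len = sum(len(w) for w in words) / len(words)
    vocab_part = min(avg_word_len / 8, 1.0)
    return round(6 * (0.5 * length_part + 0.5 * vocab_part), 2)

# A short, direct answer that actually makes an argument.
plain = "Schools should start later. Teens need sleep. Tired students learn less."

# Long, ornate filler repeated five times; it argues nothing.
padded = ("Notwithstanding multitudinous considerations, institutional "
          "configurations perpetually necessitate comprehensive reconsideration "
          "regarding fundamental organizational characteristics. ") * 5

print("plain :", surface_score(plain))
print("padded:", surface_score(padded))
```

In this sketch the padded filler outscores the plain essay simply because it is longer and uses longer words, which mirrors the pattern described above: verbose, vocabulary-heavy writing is rewarded, and simple, direct writing is not.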

These biases show up directly in the scores. In testing, among students of comparable ability, the E-rater machine scoring system gave students from mainland China an average of 1.31 points, while African American students received only 0.81 points.

Of course, if you are preparing for the GRE, don't worry: the system only assists the human scorer, and the final score is still decided by humans.

Besides ETS, the New Jersey Institute of Technology has also discovered algorithmic bias in a machine scoring system it was using.

The New Jersey Institute of Technology had used a scoring system called ACCUPLACER to determine whether first-year students needed additional tutoring, but a later study by its technical committee found that the system was biased against essays written by Asian-American and Hispanic students and did not judge them fairly.

Even a nonsense essay can get a high score

If algorithmic bias merely shifted scores a little, the damage to fairness might be limited. But machine scoring systems have a more serious flaw.

They cannot recognize nonsense.

A few years ago, Les Perelman, a former writing director at MIT, and a group of students used the essay generator BABEL to piece together several essays.

These essays are nothing like normal ones. Although they use a lot of advanced vocabulary and complex sentence patterns, most of the text is incoherent, with sentences that do not follow from one another, and can fairly be described as complete nonsense.

They submitted these essays to several different machine scoring systems. Unexpectedly, the essays scored well.

Even more surprisingly, VICE replicated the experiment a few years later, and the results were strikingly similar. Elliot, a professor at the New Jersey Institute of Technology, said:

Current essay scoring systems emphasize grammatical accuracy and the conventions of written language, but they struggle to detect a student's sharp views and original insights. Yet human raters see these two qualities as the most valuable part of an essay.

Many people in education have already raised doubts about these machine graders, and Australia has announced that it will shelve machine scoring in its standardized tests for the time being.

Sarah Myers West from the AI Now Institute says that, as in the broader field of artificial intelligence applications, it is a long-lasting battle to eliminate algorithmic bias in the scoring system.

Nevertheless, both Elliot of the New Jersey Institute of Technology and Sarah Myers West of the AI Now Institute support the development of machine scoring systems, because this is indeed the direction of the future. As Cydnee Carter, an exam development assessor in Utah, says, evaluating essays by machine can save the country's education system a great deal of manpower and material resources. It can also give students and teachers timely academic feedback, greatly improving the efficiency of education.

Until they can be truly fair and just, however, these machine assessors should play only a supporting role.