Faced with the failure of Moore’s Law and the revival of AI, Intel had to transform.

Editor’s note: This article is from the WeChat public account “iFeng Technology” (ID: ifeng_tech), by Xiao Yu.

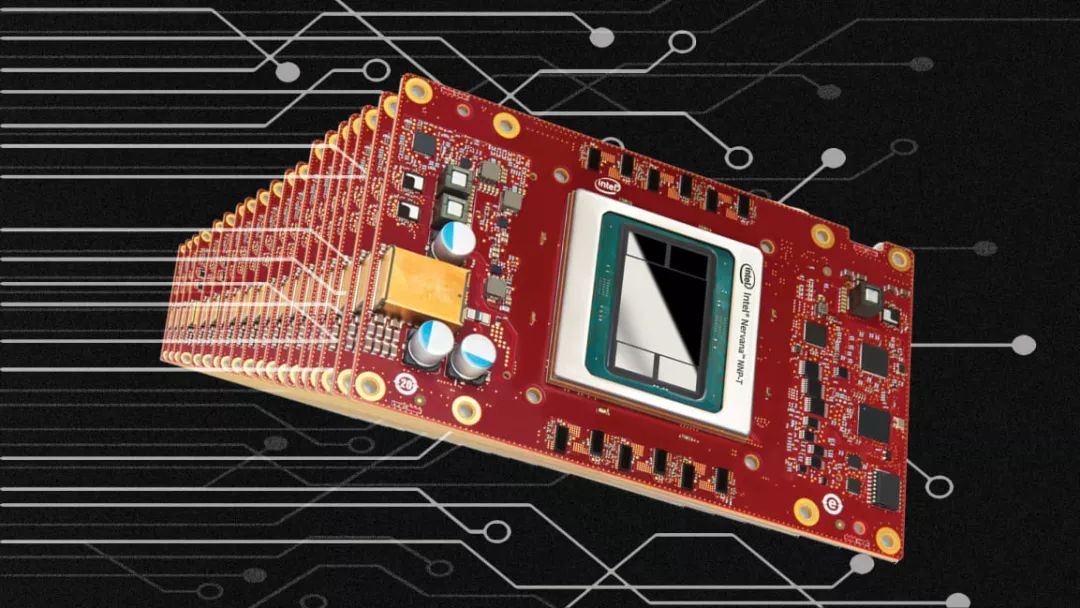

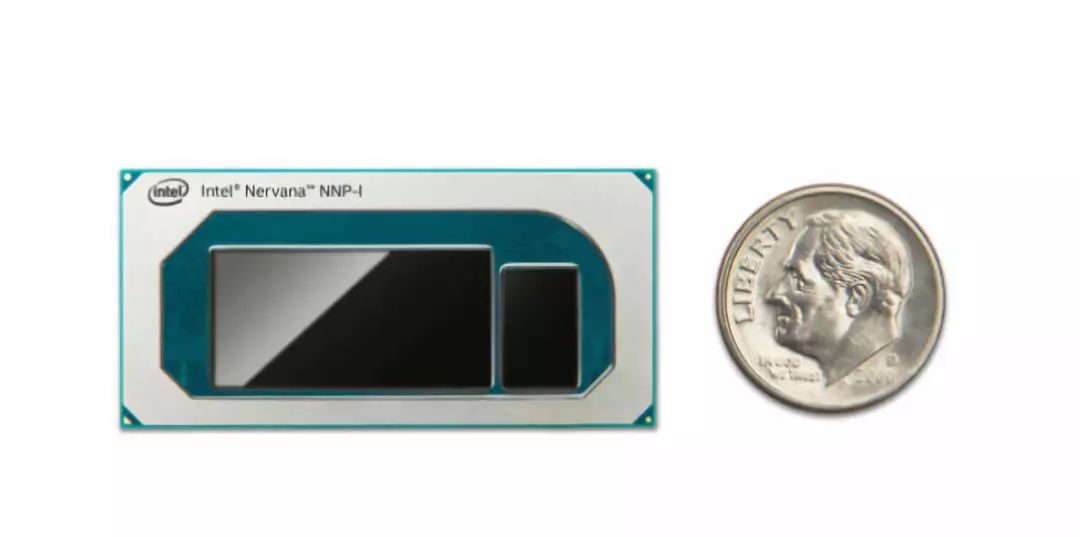

Intel Nervana chip for training neural networks

An in-depth article published by the business magazine “Fast Company” argues that, with Moore’s Law faltering and artificial intelligence (AI) enjoying a renaissance, the traditional chip giant Intel has had to transform and can no longer rely on CPUs alone. It has obtained chips suited to training neural networks through acquisitions. At the same time, Intel has begun to move away from its long-held practice of manufacturing every chip itself, outsourcing some production to reduce costs and ease delivery pressure.

The following is the full text of the article:

When the author walked up to the Intel visitor center in Santa Clara, California, a large group of Korean teenagers poured off their bus and cheerfully set about taking selfies and group photos in front of the huge Intel logo. This is the kind of fandom you would expect to see only at Apple or Google, so why does Intel inspire it?

Don’t forget that the “Silicon” in Silicon Valley stands for chips, and that Intel is their archetypal maker. The company’s processors and other technologies provided much of the underlying performance behind the PC revolution. Today, Intel is “51 years old” and still retains some of its “star charm.”

Intel’s profound changes

However, Intel is also going through a period of profound change, reshaping both its culture and the way it makes products. As before, Intel’s core products are microprocessors, the “brains” of desktops, laptops, tablets, and servers, which are made by using specialized processes to etch millions or billions of transistors onto silicon wafers. Each transistor has an “on” and an “off” state, corresponding to the binary digits “1” and “0” used by the computer.

Since the 1950s, Intel has steadily improved processing performance by continuously adding more transistors to silicon wafers. The increase was so steady that Intel co-founder Gordon Moore made a famous prediction in 1965: the number of transistors on a chip doubles every two years, which became known as “Moore’s Law”. Analysts say that Moore’s Law held for many years, but that Intel’s strategy of adding transistors has reached a point of “diminishing returns.”

At the same time, the market’s demand for processing performance has never been higher. Analysts say AI is now widely used in core business processes in almost every industry, and its renaissance has left computing performance in short supply. Neural networks require a large amount of computing performance, and they deliver their best results only when networks of computers work together. Their applications extend far beyond the PCs and servers that originally made Intel a giant.

“Whether it’s a smart city, a retail store, a factory, a car, or a home, all of these are a bit like computers today,” said Bob Swan, who has been Intel’s CEO since January this year.

The structural changes brought by AI and Intel’s ambition to expand its business have forced Intel to adjust the design and features of some of its chips. Intel is developing and designing software and chips that work together, and it may even acquire companies to keep pace with the changing computing world. As AI gradually enters corporate and personal life, and the industry increasingly depends on Intel to provide chip performance to drive AI, Intel’s further transformation is imperative.

Death of Moore’s Law

At present, AI is used in core businesses mainly by large technology companies with their own data centers, some of which, such as Amazon, Microsoft and Google, also offer AI as a cloud service to enterprise customers. However, AI has begun to spread to other large enterprises, which train AI models to analyze massive amounts of data and act on the results.

This shift requires enormous computing performance, and AI’s “craving” for that performance is exactly where the rise of AI collides with Moore’s Law.

For decades, Moore’s 1965 prediction was significant for the entire technology industry. Hardware manufacturers and software developers traditionally tied their product roadmaps to the performance they expected from next year’s CPUs. It can be said that Moore’s Law had everyone “dancing to the same tune”.

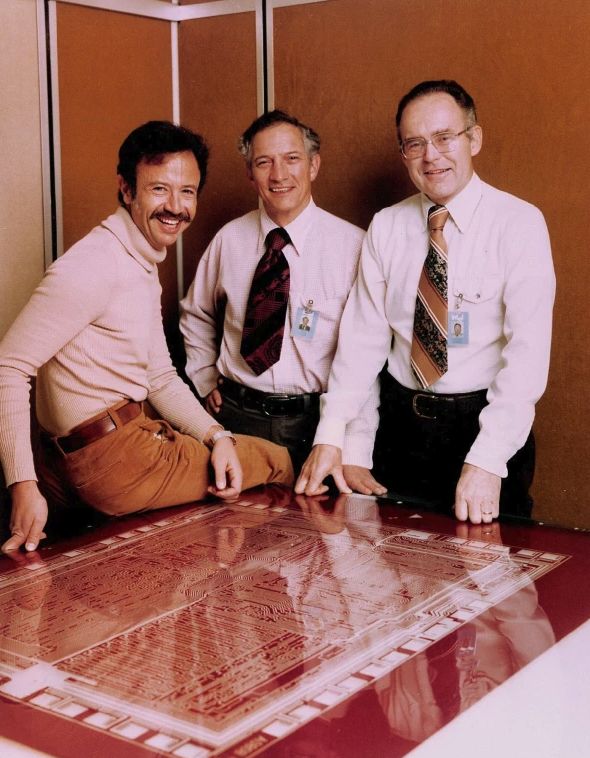

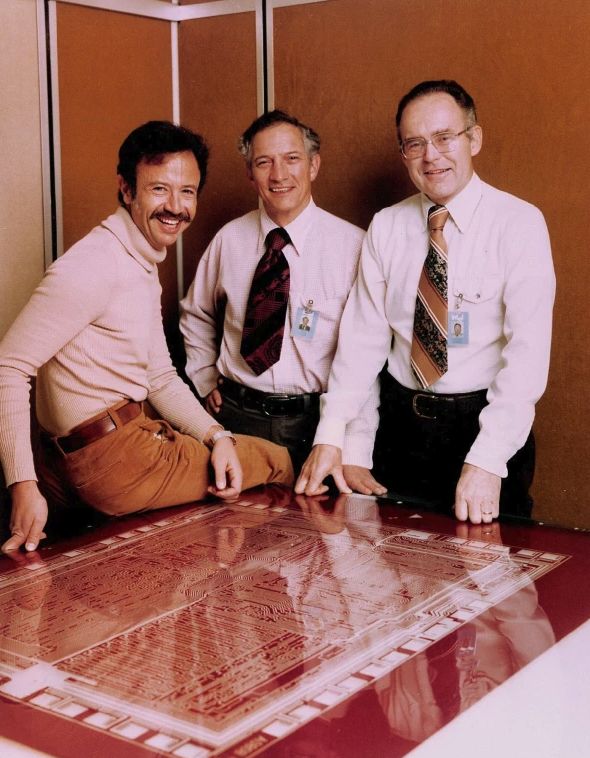

Intel co-founder Moore (right)

Moore’s Law also amounted to a promise by Intel that chip performance would improve every year. For most of its history, Intel has kept that promise by finding ways to add more transistors to silicon wafers, but doing so has become increasingly difficult.

“Chip factories are about to lose their ability to provide performance improvements,” said Patrick Moorhead, principal analyst at market research firm Moor Insights & Strategy.

Although it is still possible to add more transistors to a silicon wafer, doing so is becoming more expensive and more time-consuming, and the performance gains may not be enough to meet the needs of the computer scientists who build neural networks. For example, the largest known neural network in 2016 had 100 million parameters, while the largest so far in 2019 has 1.5 billion parameters, an increase of more than an order of magnitude in just a few years.
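The gap between those two growth curves can be checked with a little arithmetic. The sketch below uses only the figures quoted above (100 million parameters in 2016, 1.5 billion in 2019, and a two-year doubling period for transistors). The function and variable names are illustrative, and parameter count is only a rough stand-in for the computing performance a model needs.

```python
import math


def moores_law_factor(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor implied by doubling once every `doubling_period_years`."""
    return 2.0 ** (years / doubling_period_years)


# Figures quoted in the article.
params_2016 = 100_000_000
params_2019 = 1_500_000_000
years = 2019 - 2016

# How much the largest known model grew over the period.
model_growth = params_2019 / params_2016

# How much transistor counts would grow over the same period under Moore's Law.
transistor_growth = moores_law_factor(years)

# Doubling time that the model-size growth would correspond to, in months.
implied_doubling_months = 12 * years / math.log2(model_growth)

print(f"Model size grew {model_growth:.0f}x in {years} years")
print(f"Moore's Law would give about {transistor_growth:.1f}x over the same period")
print(f"Implied doubling time for model size: about {implied_doubling_months:.0f} months")
```

On these numbers, model size grew several times faster than a two-year doubling period would deliver, which is the mismatch the article describes.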

Compared with the previous computing paradigm, AI presents a very different growth curve, which pressures Intel to find ways to improve the processing performance of its chips.

However, Swan sees AI more as an opportunity than as a challenge. He acknowledged that data centers may be the market where Intel benefits most, because companies need powerful chips for AI training and inference, but he believes Intel also has a growing opportunity to sell AI-capable chips for small devices such as smart cameras and sensors. What matters in those devices is small size and low power consumption, not the raw performance of the chip.

“I think we need to speed up development on three technologies: one is AI, one is 5G, and the other is autonomous systems, which are essentially computers on the move,” said Swan, who took over as Intel’s chief last year after Brian Krzanich left over an extramarital affair.

Intel CEO Swan

In a large, unremarkable conference room at Intel’s headquarters, Swan divided Intel’s business into two columns on a whiteboard at the front of the room. The left column is the PC chip business, which currently accounts for half of Intel’s revenue; the right column is the data center business, including the emerging Internet of Things, self-driving car and network equipment markets.

“The world we are entering requires more and more data, which calls for stronger processing, storage, and retrieval capabilities, faster data movement, and analytics and intelligence to make the data relevant,” Swan said.

Swan is no longer focused on holding a 90% share of the $50 billion data center market; he is looking at a broader market that also takes in the Internet of Things, self-driving cars and network equipment. He described this strategy as “starting with our core competencies and then inventing in some areas, while at the same time extending our existing business.”

This may also be a way for Intel to move quickly out of the shadow of its failed attempts at smartphone chips. The company recently abandoned its large investment in smartphone baseband chips and sold that business to Apple. In smartphone chips, Qualcomm has long been as dominant as Intel is in PC chips.

By 2023, the IoT market, which includes chips for robots, drones, cars, smart cameras, and other mobile devices, is expected to reach $2.1 trillion. Although Intel’s share of the IoT chip market is growing at a double-digit rate year on year, IoT still accounts for only 7% of Intel’s total revenue.

The data center is Intel’s second-largest business, contributing 32% of the company’s revenue, behind only the PC chip business (50% of revenue). Of all Intel’s businesses, the data center is the one most affected by AI. That is why Intel has been adapting Xeon, its most powerful CPU line, to make it suitable for machine learning tasks.

In April of this year, Intel added deep learning acceleration technology (DL Boost) to its second-generation Xeon CPUs, which delivers stronger performance for neural networks with a negligible loss of accuracy. For the same reason, Intel will start selling two chips next year that are good at running large machine learning models.
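The article does not say how DL Boost trades accuracy for performance. One common way to make that kind of trade is to run neural network arithmetic on 8-bit integers instead of floating-point numbers. The sketch below is a generic, plain-Python illustration of that idea under that assumption, not Intel’s implementation; the function names and sample values are made up for the example.

```python
def quantize_int8(values):
    """Symmetric linear quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values) or 1.0  # avoid dividing by zero
    scale = max_abs / 127.0
    return [round(v / scale) for v in values], scale


def dot_int8(qa, scale_a, qb, scale_b):
    """Integer dot product, converted back to floating point once at the end."""
    acc = sum(x * y for x, y in zip(qa, qb))
    return acc * scale_a * scale_b


# Hypothetical weights and inputs for a single neuron.
weights = [0.12, -0.53, 0.98, -0.07]
inputs = [1.50, 0.25, -0.75, 2.00]

# Full-precision result.
exact = sum(w * x for w, x in zip(weights, inputs))

# Reduced-precision result.
qw, scale_w = quantize_int8(weights)
qx, scale_x = quantize_int8(inputs)
approx = dot_int8(qw, scale_w, qx, scale_x)

rel_error = abs(approx - exact) / abs(exact)
print(f"exact={exact:.4f} approx={approx:.4f} relative error={rel_error:.2%}")
```

The integer version gives up a small amount of precision; on hardware with dedicated integer instructions, the multiply-and-accumulate step in `dot_int8` is where the speedup would come from.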

AI revival exposes a gap in Intel’s chip lineup

By 2016, it had become clear that neural networks would be used in all kinds of applications, from product recommendation algorithms to natural language processing for customer service bots. Like other chip makers, Intel knew it had to offer its large customers a combination of hardware and software designed specifically for AI, one that could be used to train AI models and make inferences from massive amounts of data.

At that time, Intel had no such chip. The industry view was that Intel’s Xeon processors were very good at analytics, but that the graphics processors (GPUs) made by Intel’s rival NVIDIA were better suited to training AI models. That perception has affected Intel’s business.

So in 2016 Intel turned to acquisitions, paying $400 million for a deep learning chip company called Nervana, which was already developing ultra-fast chips designed for AI training.

Three years have passed. Looking back, this seems to have been a wise move by Intel. At an event in San Francisco this November, Intel announced two new Nervana neural network processors, one designed to run neural network models that infer meaning from large amounts of data, and the other to train neural networks. Intel worked with two major customers, Facebook and Baidu, to help validate its chip designs.

Nervana CEO Rao

Nervana was not Intel’s only acquisition in 2016. In the same year, Intel also acquired Movidius, which had been developing small chips that can run computer vision models inside devices such as drones and smart cameras. Sales of Intel’s Movidius chips are not high, but they have been growing rapidly and have opened up the IoT market that Swan is excited about. At the San Francisco event, Intel also announced a new Movidius chip, which will reach the market in the first half of next year.

Nervana founder and CEO Naveen Rao said that many Intel customers already do at least some AI computing on the conventional Intel CPUs in their data center servers, but that getting those CPUs to work together to meet the demands of neural networks is not easy. Nervana chips, by contrast, include multiple connections so that they can easily work with other processors in the data center.

“Now, I can take my neural network and split it into multiple small systems that can work together,” Rao said, “so we can have an entire server rack, or four racks, solve a problem together.”

In 2019, Intel expects to generate $ 3.5 billion in revenue from AI-related products. Currently, only a few Intel customers are using Nervana chips, but its user base is likely to expand significantly next year.

A long-held view of chips changes

The launch of the Nervana chips marks a change in one of Intel’s most deep-rooted beliefs: the chip giant used to hold that a single CPU could handle all the computing tasks a PC or server needs to perform. That widespread belief began to change with the gaming revolution, which demanded extreme computing power to display complex graphics.

It made sense to hand graphics processing to a GPU so that the CPU did not have to take on that part of the work. Swan said that Intel began integrating GPUs into its CPUs a few years ago and will release a discrete GPU for the first time next year.

The same idea applies to AI models. In a data center server, the CPU can handle a certain amount of AI work, but as the workload grows it becomes more efficient to hand it off to a dedicated chip. Intel has been investing in new designs that combine the CPU with a range of dedicated accelerator chips to meet customers’ performance and workload requirements.

“When you design a chip, you need to use the power of the whole system to solve the problem, and that often takes more chips than a single CPU,” Swan said.

In addition, Intel now relies more on software to push the performance and power of its processors to new levels, which has changed the balance within Intel. An analyst said that at Intel, software development is currently “on an equal footing” with hardware development.

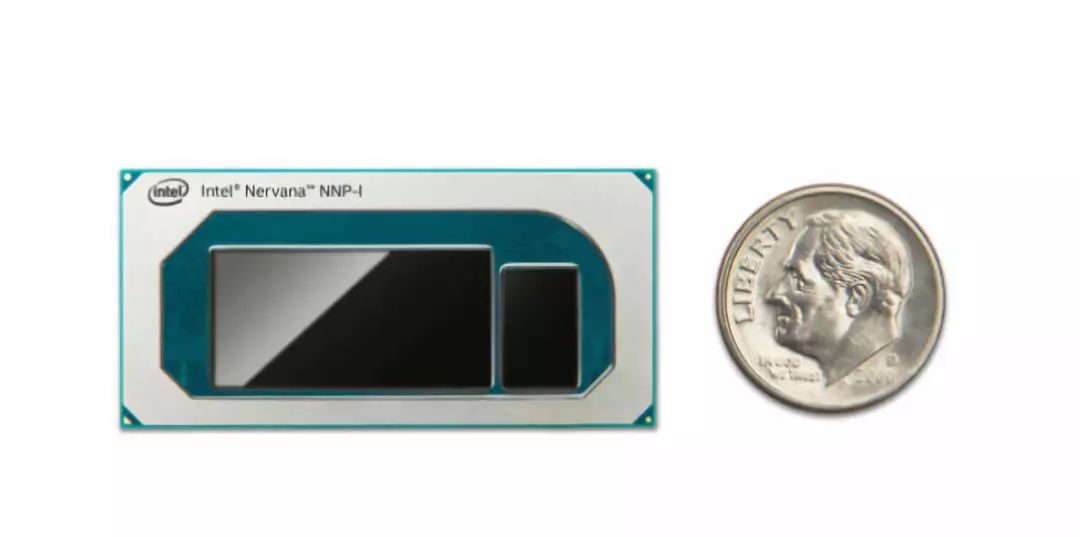

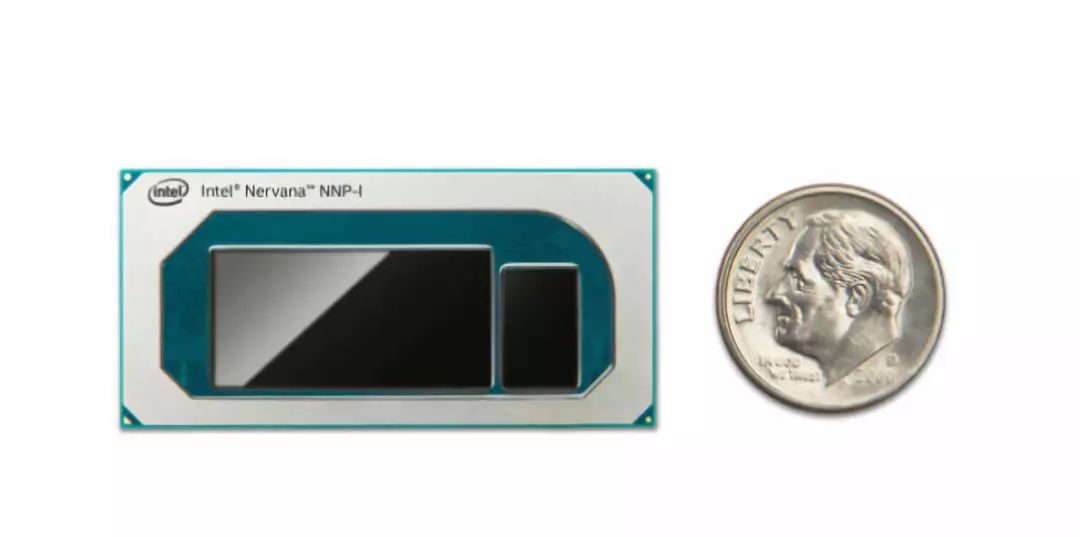

Intel Nervana inference chip

In some cases, Intel no longer manufactures all of its chips itself. This landmark change breaks with the company’s traditional practice. Now, if chip designers believe another company can manufacture a chip better and more efficiently than Intel can, Intel will outsource its production. For example, the new chip for AI training is manufactured by TSMC.

Intel outsources some chip manufacturing for both business and economic reasons. Because Intel’s most advanced manufacturing process is capacity-constrained, many customers have had to wait for shipments of new Xeon CPUs. Intel has therefore handed production of some of its other chips to outside manufacturers. Earlier this year, Intel sent a letter to customers apologizing for delays in chip shipments and announcing plans to catch up.

All these changes challenge Intel’s long-standing beliefs, adjust the company’s focus, and rebalance the old corporate power structure.

During this transition period, Intel’s performance looks very good. Analyst Mike Feibus said that Intel’s traditional PC chip sales are down 25% from five years ago, but that sales of Xeon processors for data centers are growing strongly.

Some Intel customers already use Xeon processors to run AI models. If the workload grows, they may consider adding the new dedicated Nervana chips. Intel expects that the first customers for Nervana chips will be “hyperscale users”, the technology giants that operate large data centers, such as Google, Microsoft and Facebook.

That Intel missed the mobile revolution and ceded the smartphone processor market to Qualcomm is not news. But in reality, mobile devices have become vending machines for services that are delivered to your phone from cloud data centers. So when you watch streaming video on a tablet, an Intel chip is probably helping to deliver it.

The arrival of 5G may make real-time services possible, such as playing games in the cloud. A pair of futuristic smart glasses might connect at very high speed to an algorithm running in a data center and recognize objects instantly.

All of this adds up to a very different era, far removed from the technology world built around PCs running Intel chips. However, as AI models become more complex and versatile, Intel has the opportunity to become the best company to power them, just as it has powered our PCs for nearly half a century.

Reference:

https://www.fastcompany.com/90439144/machine-learning-could-transform-medicine-should-we-let-it
