The new model can more accurately update factual information and output sentences closer to the results written by humans.

Editor’s note: This article is from the WeChat public account “Big Data Digest” (ID: BigDataDigest), compiled by Iris and Qian Tianpei.

Source: MIT

Wikipedia, the open collaborative encyclopedia, is one of the ten most popular websites in the world and now contains millions of entries.

As facts change, thousands of articles need updating every day. Edits range from expansions and major rewrites to routine revisions such as updating figures, dates, names, and places. Today this work is done by volunteers around the world.

Fortunately, a recent MIT study is expected to greatly reduce the maintenance pressure on volunteers.

At this year’s AAAI Conference on Artificial Intelligence, several MIT researchers presented a text generation system that pinpoints specific information in Wikipedia sentences and replaces it, in language similar to how humans write and edit.

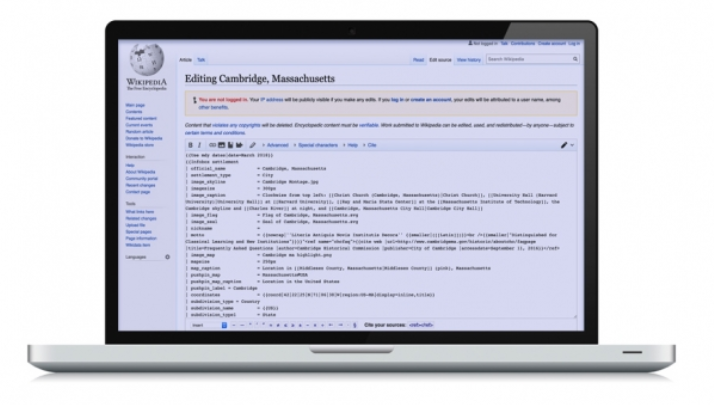

A person only needs to type a short sentence into an interface to indicate what has changed. The system then searches Wikipedia automatically, locates the relevant page and the outdated sentence, and rewrites that sentence in a humanlike way.

The researchers also mentioned that a fully automated system could be built in the future, one that identifies the latest information on the Internet and uses it to generate rewritten sentences for the corresponding Wikipedia articles.

Darsh Shah, a co-author of the paper and a PhD student at the Computer Science and Artificial Intelligence Laboratory (CSAIL), said, “Wikipedia articles need constant updating. It would be very valuable to revise articles automatically and accurately, with little or no human intervention. Revising Wikipedia articles would no longer take a lot of manpower. A few people would be enough, because the model can do the work automatically. That is a huge improvement.”

In fact, many other bots already edit Wikipedia automatically. Shah mentioned that these tools are generally used to mitigate vandalism or to drop narrowly defined information into predefined templates.

He pointed out that their new model tackles a tricky problem in artificial intelligence: given a new piece of unstructured information, automatically revise a sentence the way a human would.

“Other bots rely more on rule-based methods, but automatic revision requires being able to identify the contradictory parts of two sentences and generate coherent text.”

Co-author and CSAIL graduate student Tal Schuster mentioned that the system can also be used for other text generation applications. In the paper, the researchers used it to automatically synthesize sentences for a popular fact-checking dataset, which reduced bias without the need to manually collect additional data. Schuster said this approach can improve automated fact-checking models, such as those trained on these datasets to detect fake news.

Shah and Schuster co-authored the paper with Regina Barzilay, the Delta Electronics Professor of Electrical Engineering and Computer Science and a professor at CSAIL.

“Neutrality masking”

Drawing on a family of text generation techniques, the system identifies contradictory information between two separate sentences and fuses them together. It takes as input an “outdated” sentence from a Wikipedia article and a separate “claim” sentence (the statement that carries the updated, conflicting information). Based on the claim, the system automatically deletes some words in the outdated sentence and keeps others, updating the facts without changing the style or grammar. This is easy for humans but challenging for machine learning.

For example, suppose the sentence “Federer has 19 Grand Slams” needs to be updated to “Federer has 20 Grand Slams.” Based on the claim, the system finds “Federer” on Wikipedia, replaces the outdated figure (19) with the new one (20), and keeps the sentence’s original structure and grammar. In their work, the researchers ran the system only on a dataset of specific Wikipedia sentences, not on all of Wikipedia’s pages.

The system is trained on a popular dataset of sentence pairs, each consisting of a claim and a related Wikipedia sentence. Each pair is labeled with one of three states: agree, disagree, or neutral.

“Agree” means the two sentences contain consistent factual information. “Disagree” means they contain contradictory information. “Neutral” means there is not enough information to decide either way. After the system rewrites the outdated sentence according to the claim, every pair labeled “disagree” should end up in the “agree” state. Achieving this requires two separate models.
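The labeled pairs described above can be pictured with a small data structure. This is only an illustrative sketch: the names `Label` and `SentencePair` are hypothetical and are not taken from the paper or the dataset.

```python
from dataclasses import dataclass
from enum import Enum


class Label(Enum):
    AGREE = "agree"
    DISAGREE = "disagree"
    NEUTRAL = "neutral"


@dataclass(frozen=True)
class SentencePair:
    claim: str     # sentence carrying the (possibly newer) fact
    evidence: str  # related Wikipedia sentence
    label: Label


# The Wikipedia sentence is outdated, so the pair starts out as "disagree".
before = SentencePair(
    claim="Federer has 20 Grand Slams",
    evidence="Federer has 19 Grand Slams",
    label=Label.DISAGREE,
)

# After a successful rewrite, the same claim is paired with an updated
# sentence and the label should flip to "agree".
after = SentencePair(
    claim=before.claim,
    evidence="Federer has 20 Grand Slams",
    label=Label.AGREE,
)
```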

The first model is a fact-checking classifier, pretrained to label each sentence pair as “agree”, “disagree”, or “neutral”. Its main job is to find contradictory sentence pairs.

A custom “neutrality masker” module runs alongside the classifier to identify which words in the outdated sentence contradict the claim. The module removes as few words as possible to achieve “maximum neutrality”, that is, so the pair can be labeled neutral.

In other words, once those words are masked, the two sentences no longer contradict each other. The system builds a binary mask over the outdated sentence, where 0 marks words that most likely need to be deleted and 1 marks words to keep.
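As a rough illustration of the 0/1 mask, the sketch below applies a hand-written mask to the Federer sentence. The function name `apply_mask` and the `[MASK]` placeholder are assumptions made for this example. In the actual system the mask is predicted by the neutrality masker, not written by hand.

```python
def apply_mask(tokens, mask, placeholder="[MASK]"):
    """Replace every token whose mask bit is 0 with a placeholder."""
    if len(tokens) != len(mask):
        raise ValueError("tokens and mask must have the same length")
    return [tok if keep == 1 else placeholder for tok, keep in zip(tokens, mask)]


outdated = "Federer has 19 Grand Slams".split()
# Hand-written for illustration: 0 = likely delete, 1 = keep.
mask = [1, 1, 0, 1, 1]

masked = apply_mask(outdated, mask)
print(" ".join(masked))  # Federer has [MASK] Grand Slams
```

With the number masked out, the remaining words neither agree nor disagree with the claim about 20 Grand Slams, which is the "neutral" state the masker aims for.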

After masking, a “two-encoder-decoder” framework generates the final output sentence. The model learns representations of both the claim and the outdated sentence. The two-encoder-decoder then fuses in the contradicting words from the claim: it first deletes the words in the outdated sentence that carry contradictory information (the words marked 0), then fills in the updated words.
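The delete-then-fill order can be mimicked with a toy function. This is not the learned two-encoder-decoder: `fuse` is a hypothetical, rule-based stand-in that copies unseen words from the claim into the first masked slot, and it only works for simple cases like the Federer example.

```python
def fuse(outdated_tokens, mask, claim_tokens):
    """Toy stand-in for the learned decoder: drop masked tokens, fill from the claim."""
    kept = {tok for tok, keep in zip(outdated_tokens, mask) if keep == 1}
    # Candidate replacements: claim tokens not already present in the kept part.
    new_tokens = [tok for tok in claim_tokens if tok not in kept]

    out = []
    filled = False
    for tok, keep in zip(outdated_tokens, mask):
        if keep == 1:
            out.append(tok)
        elif not filled:
            # Insert the new tokens at the first masked position;
            # any later masked positions are simply dropped.
            out.extend(new_tokens)
            filled = True
    return out


outdated = "Federer has 19 Grand Slams".split()
claim = "Federer has 20 Grand Slams".split()
updated = fuse(outdated, [1, 1, 0, 1, 1], claim)
print(" ".join(updated))  # Federer has 20 Grand Slams
```

A learned decoder is needed in practice because the claim and the outdated sentence rarely share wording this closely, so the new words have to be generated to fit the original sentence's style.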

In testing, the model outperformed all of the traditional methods it was compared with. The evaluation used a metric called “SARI”, which measures how well the machine deletes, adds, and keeps words relative to how humans revise the same sentences.
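To give a feel for what a metric of this kind measures, here is a heavily simplified sketch. It is not the official SARI implementation: it assumes single words instead of n-grams, a single human reference, and a convention that empty sets score 1.0. The name `simple_sari` is hypothetical.

```python
def _ratio(hits, total):
    # Assumed convention: an empty set counts as a perfect score.
    return hits / total if total else 1.0


def _f1(precision, recall):
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


def simple_sari(source, output, reference):
    """Unigram, single-reference simplification of SARI (not the official metric)."""
    src, out, ref = (set(s.split()) for s in (source, output, reference))

    # Words the system added vs. words the human added.
    out_add, ref_add = out - src, ref - src
    add_hits = len(out_add & ref_add)
    add_f1 = _f1(_ratio(add_hits, len(out_add)), _ratio(add_hits, len(ref_add)))

    # Words the system kept vs. words the human kept.
    out_keep, ref_keep = out & src, ref & src
    keep_hits = len(out_keep & ref_keep)
    keep_f1 = _f1(_ratio(keep_hits, len(out_keep)), _ratio(keep_hits, len(ref_keep)))

    # Words the system deleted vs. words the human deleted (precision only).
    out_del, ref_del = src - out, src - ref
    del_precision = _ratio(len(out_del & ref_del), len(out_del))

    return (add_f1 + keep_f1 + del_precision) / 3


source = "Federer has 19 Grand Slams"
human = "Federer has 20 Grand Slams"
perfect = simple_sari(source, human, human)     # output matches the human edit
unchanged = simple_sari(source, source, human)  # output left the sentence as-is
```

An output that matches the human revision scores higher than one that leaves the outdated sentence untouched, because the untouched sentence misses the added word and keeps a word the human removed.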

Compared with traditional text generation methods, the new model updates factual information more accurately and produces output closer to what humans write.

In another test, crowdsourced workers rated the model’s output sentences on a scale of 1 to 5, based on how accurately the facts were updated and how well the grammar matched human writing. The model averaged 4 for factual updates and 3.85 for matching grammar.

Data augmentation, removing bias

The research also shows that the system can be used to augment the datasets that train “fake news” detectors, removing bias from the training.

“Fake news” uses disinformation to mislead readers in order to attract more web traffic or steer public opinion.

A model for detecting false information usually needs many “agree-disagree” sentence pairs as training data.

In these pairs, the claim either contains information that matches the “evidence” sentence from Wikipedia (agree) or has been manually modified to contain information that contradicts the evidence sentence (disagree). The model is trained to flag claims that contradict the evidence as “false”, which helps identify false information.

Unfortunately, Shah says these datasets inevitably carry biases. “During training, certain phrases in the claims act as giveaways, letting the model exploit a ‘loophole’ without relying on sufficiently relevant ‘evidence’ sentences. When it is evaluated on real-world examples, this lowers the model’s accuracy, and it fails to do effective fact-checking.”

The researchers applied the same deletion and fusion techniques from the Wikipedia project to balance the “disagree” and “agree” pairs in the dataset and mitigate the bias. For some “disagree” pairs, they used the false information in the modified claim to regenerate a fake “evidence” sentence that supports it. Once the giveaway phrases appear in both “agree” and “disagree” pairs, the model is forced to analyze more features. With the augmented dataset, the study reduced the error rate of a fake news detector by 13%.
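The balancing idea can be sketched as a small augmentation loop. Everything here is an assumption for illustration: `augment` is a hypothetical helper, the example pair is made up, and `echo_claim` is a trivial stand-in for the rewriting system, which in the study regenerates the evidence sentence with the deletion and fusion model.

```python
def augment(pairs, regenerate_evidence):
    """Add an 'agree' pair for every 'disagree' pair by regenerating its evidence.

    pairs: list of (claim, evidence, label) tuples.
    regenerate_evidence: callable(evidence, claim) -> evidence supporting the claim.
    """
    augmented = list(pairs)
    for claim, evidence, label in pairs:
        if label == "disagree":
            fake_evidence = regenerate_evidence(evidence, claim)
            augmented.append((claim, fake_evidence, "agree"))
    return augmented


def echo_claim(evidence, claim):
    # Trivial stand-in for the rewriting model: reuse the claim as evidence.
    return claim


# Made-up pair: the claim was modified to contradict the evidence.
pairs = [
    ("Federer did not win 20 Grand Slams", "Federer has 20 Grand Slams", "disagree"),
]
balanced = augment(pairs, echo_claim)
```

After augmentation the same claim, including any giveaway wording such as "did not", appears under both labels, so a detector can no longer predict the label from the claim alone.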

Shah emphasizes, “If your dataset is biased, your model will tend to be skewed. That is why data augmentation is so necessary.”

Related reports:

https://www.csail.mit.edu/news/automated-system-can-rewrite-outdated-sentences-wikipedia-articles