In an inconspicuous office in Berlin, 600 employees stare at their screens and click their mice like cold machines, yet what they do affects 2 billion people around the world:

Of the billions of posts published every day in more than one hundred languages, which should be kept, and which must be deleted immediately?

They are Facebook content auditors who make a decision almost every 10 seconds.

▲ The working environment of Facebook’s content auditors, as visited by Vice. Image courtesy of MAX HOPPENSTEDT

Most people do not really understand this group. They are not on the unfathomable dark web, nor are they close to ordinary netizens. But as University of California professor Sarah T. Roberts says in her recent book, “Behind the Screen”:

The darkest side of social media is not on the screens of the vast majority of netizens, but in front of the content auditors.

▲ Image from: “Network Scavenger” screenshot

Behind reviewing 25,000 items a day

Hello, the *** you posted has been removed because it contains content that violates relevant laws, regulations, or rules.

When content you post on Weibo, Bilibili, or Douban fails review or is suddenly deleted, a content auditor is at work.

Major Internet companies at home and abroad add more of these positions every year. Facebook alone has more than 20,000 auditors around the world, and it seems to be a profession that is always short of people.

In the early days, the Internet was still a fragmented network that connected people through billions of websites and communities. As the Internet became more “corporate” with Facebook, YouTube, Instagram and others, content auditors began to appear to monitor their respective websites.

So in a nutshell, a content auditor is someone who deletes content on the Internet that is suspected of violating the rules. However, the first impression the job gives people is generally that of “the yellow master,” a porn screener (“yellow” is Chinese slang for pornographic content).

Screening porn is indeed part of the job, but only part. Although many people think of it as a perk, it is not as simple as they imagine.

In the documentary “Network Scavenger,” a Facebook content auditor’s daily quota is 25,000 items. The content is child pornography, terrorism, self-harm, extremist speech… Their duty is to keep the dirtiest and most disgusting information out of the world we can see.

▲ Image from: Screenshot of “Network Scavenger”

Shock, depression, anger, indifference, moral condemnation… Mixed emotions constantly assault the senses and test the limits of tolerance. But this is not a roller coaster, where you return to the ground after the tumbling. Every newcomer has to stay in the car, and the only change is from the initial shock to the numbness of habit.

To do this, you have to be a strong person and know yourself very well.

▲ Image from: “Network Scavenger”

According to employees of an Internet company in China, their daily work, apart from some extreme situations, is repetitive or even boring most of the time:

A monotonous work environment.

Staring at a screen all day, with day and night turned upside down.

Among thousands of selfies, vacation photos, and vulgar videos, we search mainly for pornographic and political content, plus a small number of posts about gambling, usury, and drug dealing.

Delete, look again, delete again, then look.

▲ Even a famous photo from the Vietnam War can be deleted for “involving nude photos of minors”

In addition, their average salary is just above the minimum wage. The content review rules are very vague and change often. Auditors mostly rely on established patterns and long experience to judge where the line is, but sometimes they follow written directives and sometimes the boss’s orders. Every month some people arrive and some leave, and the company never tries to keep anyone.

These are just ordinary people who have suddenly come into contact with a taboo world. Faced with bloody and cruel content, the pressure keeps growing, and the accumulated depression eventually erupts.

In a 2017 class action lawsuit involving Facebook, thousands of content auditors said they had been seriously psychologically injured at work, leading to post-traumatic stress disorder. “As soon as I doze off, I hallucinate a car accident.”

But this is an indispensable and vital job: the network scavenger is the security guard of the Internet. If they do not clean up prohibited content and block illegal information, more teenagers and children will see it.

Google, Facebook, and other big companies value them highly and now employ close to hundreds of thousands of them. Although these companies provide mental health counseling and check-ups for auditors, they also acknowledge that content auditing is one of the most psychologically traumatic jobs in the tech world.

Can this “sad job” be handled in a better way, perhaps even one in which humans no longer have to do such work?

Content auditors that cannot disappear

In fact, content review today can only be done by people: it is a game of cat and mouse.

But the good news is that AI can at least help them screen content in advance.

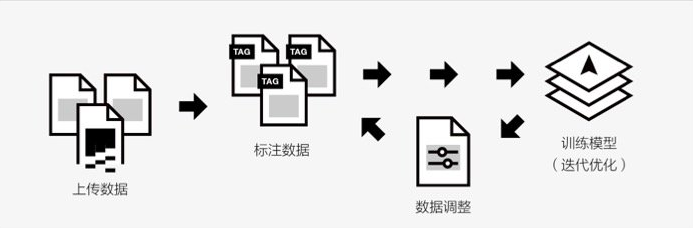

With repeated complaints and protests from auditors at Internet companies, and with users uploading ever more content, the volume of UGC (user-generated content) produced on the leading platforms every day is astronomical. Human labor is expensive and hard to scale, so almost all companies now use a “people + machine” approach to content review.

▲ Yahoo! has open sourced a deep learning neural network that outputs an NSFW (Not Suitable/Safe For Work) score. The most harmless content scores 0, the most extreme scores 1

Tuptech, which was the first to use AI technology for content review services, is said to have achieved a 99% recognition rate for pornography, violent and terrorist content, vulgarity, fraud, and so on, and to have reduced manual audit costs by more than 90%.

In combined human-machine review, the AI first performs a mass first-stage review, sorting content into normal content, clearly bad content, and ambiguous content. The posts are then classified and assigned, and auditors manually review the ambiguous content.

This way, AI can help reduce content auditors’ burden, allowing them to make decisions more efficiently and to focus on data analysis.
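The three-way split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any company’s actual system: the risk score is assumed to lie between 0 and 1 (like the NSFW value mentioned earlier), the thresholds `low` and `high` are made-up values, and `toy_scorer` is a hypothetical keyword lookup standing in for a trained model.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, Dict, List


class Route(Enum):
    APPROVE = "approve"            # normal content, published automatically
    REMOVE = "remove"              # clearly bad content, removed automatically
    HUMAN_REVIEW = "human_review"  # ambiguous content, sent to an auditor


@dataclass
class Post:
    post_id: str
    text: str


def triage(score: float, low: float = 0.2, high: float = 0.9) -> Route:
    """Map a risk score in [0, 1] to a route. Thresholds are illustrative."""
    if not 0.0 <= score <= 1.0:
        raise ValueError(f"score must be in [0, 1], got {score}")
    if score >= high:
        return Route.REMOVE
    if score <= low:
        return Route.APPROVE
    return Route.HUMAN_REVIEW


def route_posts(
    posts: List[Post], scorer: Callable[[Post], float]
) -> Dict[Route, List[Post]]:
    """Score every post and place it in the queue for its route."""
    queues: Dict[Route, List[Post]] = {route: [] for route in Route}
    for post in posts:
        queues[triage(scorer(post))].append(post)
    return queues


def toy_scorer(post: Post) -> float:
    """Hypothetical stand-in for a trained model: a simple keyword lookup."""
    text = post.text.lower()
    if "banned" in text:
        return 0.95
    if "borderline" in text:
        return 0.5
    return 0.05


posts = [
    Post("1", "holiday selfie"),
    Post("2", "borderline joke"),
    Post("3", "banned material"),
]
queues = route_posts(posts, toy_scorer)
for route, items in queues.items():
    print(route.value, [p.post_id for p in items])
```

Only the posts that land in the `HUMAN_REVIEW` queue reach an auditor. Widening the gap between the two thresholds sends more content to people; narrowing it trusts the machine more and raises the risk of automatic mistakes.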

But letting artificial intelligence replace manpower is still like a fantasy.

Although AI technology is developing ever faster, natural language processing (NLP) remains difficult, and machines cannot match human-level understanding of language. Even text review therefore produces mistakes, let alone images, audio, and video.

The limitation of deep learning is that to build a neural network that accurately detects adult content, you must first train it on millions of annotated examples of adult content. Without high-quality training data, AI can easily make mistakes.

▲ Image from: Screenshot of “Network Scavenger”

AI is good at identifying pornography, spam, and fake accounts. But because the volume of content is large, the categories are many, and the boundaries are blurred, content such as breastfeeding, menstrual art, and war atrocities will also be misjudged.

After all, the machine is “fed” by people.

For hate speech and harassment, recognition accuracy is very poor. Facebook thinks it may take 5 to 10 years before AI can detect hate speech.

▲ Image from: MICHELLE THOMPSON

Tongpu Technology CEO Li Mingqiang also said in an interview with Ai Faner:

The fact I have to admit is that AI is currently still at the stage of weak artificial intelligence. Only in the future, when AI can learn and think independently and make rational and emotional judgments as humans do, that is, at the stage of strong artificial intelligence, will there be a possibility of replacing manpower.

Therefore, content auditors will not disappear for a long time to come.

In this long future, it is foreseeable that as the volume of information grows and image and video formats keep being reinvented, the network environment will not necessarily become more “green and healthy.”

▲ Image from: CONTENTBOT.NET

Online pornography and violence that cannot disappear

Like content auditors, pornography and violence will probably always “survive” online.

At this moment, when it is easier than ever to express oneself freely, everyone makes their own voice heard. But this freedom belongs to everyone, and it is hard to guarantee that other people’s freedom will not hurt us. This is the price that inevitably comes with it.

▲ Image from: SETH LAUPUS

At the same time, as competition in the Internet content industry has entered its second half, the battle for traffic among platforms has become more and more fierce.

Besides deleting prohibited content, each platform’s content auditors deliberately let through only the content that benefits the platform, creating a brand style unique to that platform. This is also a form of brand protection.

But what all platforms have in common is that they depend on users’ attention to survive.

▲ Image from: SHUTTERSTOCK

To make users addicted, these companies use ever more precise algorithms and manual curation to keep people on the platform longer and make them want to see more and more. But pornography and violence are precisely where human desire lies.

One trending topic after another shows that human instinct likes to keep pushing the bottom line, challenging taboos, inciting opposition, and creating angry content, while social platforms want to keep a green, healthy, and bright environment. This is a contradiction that can never be resolved.

▲ Image from: Screenshot of “Network Scavenger”

Caught in this conflict, the major platforms seem to have no choice but to walk a dangerous line.

For netizens, the content we see has been selected or customized, which may keep us from understanding the deeper, uglier truth. But more importantly, we must in any case retain the ability to criticize and think.

Rather than let bullying, crime, humiliation, and reactionary content with no bottom line spread across the whole network, it is better to extinguish the flames of disaster at the source.

Content auditors cannot disappear, and neither can pornography and violence. We can only make the technology more advanced and the rules clearer. Otherwise, the Internet may head toward extremes from which there is no turning back.

▲ Image from: TIN VARSAVSKY/FLICKR

But in any case, the most important thing for us is what Zuckerberg said at a press conference:

We need the courage to choose hope, not fear, and to declare that we can make the world a better place. We must remain optimistic, and it is this hope and this optimism that have brought about great progress. More importantly, we need to work together.

After all, compared with the abyss of reality, the cruelty that content auditors see is just the tip of the iceberg.