According to an analysis by Emergen Research, the global deep learning market is expected to reach USD 93.34 billion by 2028, growing at a steady CAGR of 39.1%. The key factors driving its revenue are the adoption of cloud-based technologies and the use of deep learning systems in big data analytics.

So, what exactly is deep learning? How does it work?

According to the recent VentureBeat article “This is why deep learning is so powerful”, deep learning is a subset of machine learning that uses neural networks to learn and make predictions. Deep learning has shown impressive performance on a variety of tasks, whether text, time series, or computer vision. Its success comes mainly from the availability of big data and computing power, which allow deep learning to perform far better than classical machine learning algorithms on such tasks.

The essence of deep learning: neural networks and functions

Some netizens once joked: “When you want to fit any function or any distribution and you have no idea how, try a neural network!”

First, two important conclusions:

A neural network is a network of interconnected neurons, each of which is a finite function approximator. Taken together, neural networks are therefore regarded as universal function approximators.

Deep learning uses neural networks with many hidden layers (usually more than two). It composes functions layer by layer, in increasingly complex ways, to find a function that defines the mapping from input to output.

In high school math we learn that a function is a mapping from an input space to an output space. For example, sin(x) maps the angle space (-180° to 180°, or 0° to 360°) to the real numbers between -1 and 1. Function approximation is an important part of function theory, and its basic problem is how to represent functions approximately.
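To make function approximation concrete, here is a minimal sketch (not from the original article) that approximates sin(x) on [-π, π] with a least-squares polynomial using NumPy's `Polynomial.fit`. The degree 7 and the 200 sample points are arbitrary illustrative choices.

```python
import numpy as np
from numpy.polynomial import Polynomial

# Sample sin(x) over the angle range [-pi, pi], i.e. -180° to 180°.
x = np.linspace(-np.pi, np.pi, 200)
y = np.sin(x)

# Least-squares fit of a degree-7 polynomial: an approximate representation of sin.
approx = Polynomial.fit(x, y, deg=7)

# Worst-case deviation between sin(x) and the polynomial on the sample points.
max_error = np.max(np.abs(approx(x) - y))
print(f"max |sin(x) - p(x)| = {max_error:.2e}")
```

A polynomial is only one family of approximators; the rest of the article argues that compositions of neurons form another, more flexible one.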


So why are neural networks considered universal function approximators?

Each neuron learns a finite function f(·) = g(W·X), where W is the weight vector to be learned, X is the input vector, and g(·) is a nonlinear transformation. W·X can be visualized as a line in a high-dimensional space (a hyperplane), and g(·) can be any nonlinear, (almost everywhere) differentiable function, such as sigmoid, tanh, or ReLU, all of which are commonly used in deep learning.
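The single-neuron formula f(X) = g(W·X) can be sketched in a few lines of NumPy. The weights and input below are hypothetical values chosen so the weighted sum is easy to check by hand (0.5 - 2.0 + 1.0 = -0.5); like the formula above, the sketch has no bias term.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    return np.maximum(0.0, z)

def neuron(W, X, g=sigmoid):
    """One neuron: a nonlinearity g applied to the weighted sum W·X."""
    return g(np.dot(W, X))

W = np.array([0.5, -1.0, 2.0])   # hypothetical learned weights
X = np.array([1.0, 2.0, 0.5])    # example input vector

print(neuron(W, X))              # sigmoid(-0.5)
print(neuron(W, X, g=np.tanh))   # tanh(-0.5)
print(neuron(W, X, g=relu))      # relu(-0.5) = 0.0
```

A bias can be folded into W by appending a constant 1 to X, which is why many presentations omit it.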

Learning in a neural network is nothing more than finding the best weight vector W. For example, in y = mx + c we have two weights, m and c. Given the distribution of points in a 2D plane, we find the values of m and c that satisfy some criterion, such as making the difference between the predicted y and the actual y as small as possible over all data points.
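As a sketch of "finding the best m and c", the example below assumes the criterion is the sum of squared differences (the usual least-squares choice; the article only says "some criteria") and solves it with `numpy.linalg.lstsq`. The data points are hypothetical and lie exactly on y = 2x + 1, so the recovered weights are easy to verify.

```python
import numpy as np

# Hypothetical points in a 2D plane, lying exactly on y = 2x + 1.
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = np.array([1.0, 3.0, 5.0, 7.0, 9.0])

# Design matrix [x, 1], so that A @ [m, c] approximates y.
A = np.column_stack([x, np.ones_like(x)])

# Least squares: choose m, c minimizing sum((m*x + c - y)**2).
(m, c), *_ = np.linalg.lstsq(A, y, rcond=None)
print(m, c)   # approximately 2.0 and 1.0
```

A neural network does the same thing with many more weights, but because its function is nonlinear there is no closed-form solution, so it searches for W iteratively with gradient descent.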

Neural network “layers” at work: learning a mapping from inputs to classes

If the input is an image of a lion and the output is the class “lion”, then deep learning learns a function that maps image vectors to classes. Similarly, if the input is a sequence of words and the output is whether the sentence has positive, neutral, or negative sentiment, deep learning learns a mapping from input text to the output classes: neutral, positive, or negative.

How are these tasks achieved?

Each neuron is a nonlinear function. We stack several such neurons in a “layer”: every neuron receives the same set of inputs but learns different weights W. Each layer therefore produces a set of learned functions f1, f2, …, fn, called the hidden-layer values. These values are combined again in the next layer as h(f1, f2, …, fn), and so on, so each layer is a function of the previous layer's outputs (similar to h(f(g(x)))). It has been shown (the universal approximation theorem) that such compositions, given enough neurons, can approximate any continuous function on a bounded domain arbitrarily well, however nonlinear and complex it is.
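The layer-by-layer composition can be sketched as a tiny two-layer forward pass. Everything here is illustrative: the sizes (4 input features, 5 hidden neurons, 3 sentiment classes), the random untrained weights, the added bias vectors, and the choice of ReLU and softmax are assumptions, not details from the article.

```python
import numpy as np

rng = np.random.default_rng(0)

def relu(z):
    return np.maximum(0.0, z)

def softmax(z):
    e = np.exp(z - np.max(z))   # subtract the max for numerical stability
    return e / e.sum()

def layer(W, b, x, g):
    """One layer: every neuron sees the same input x but has its own row of W."""
    return g(W @ x + b)

# Hypothetical sizes: 4 input features -> 5 hidden neurons -> 3 classes.
W1, b1 = rng.normal(size=(5, 4)), np.zeros(5)
W2, b2 = rng.normal(size=(3, 5)), np.zeros(3)

x = np.array([0.2, -1.0, 0.5, 0.3])   # toy feature vector for a sentence
f = layer(W1, b1, x, relu)            # hidden values f1, ..., f5
p = layer(W2, b2, f, softmax)         # h(f1, ..., f5): class probabilities
print(p)                              # (negative, neutral, positive), untrained weights
```

Training would adjust W1, b1, W2, and b2 so that p puts most of its probability on the correct class.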


Deep Learning as Curve Fitting and Interpolation: Overfitting Challenges and Generalization Goals

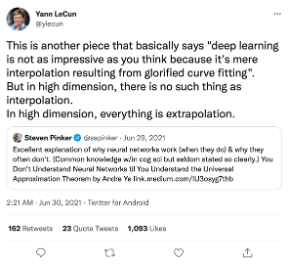

Deep learning pioneer Yann LeCun (creator of convolutional neural networks and a Turing Award winner) once tweeted: “Deep learning is not as impressive as you think because it's mere interpolation resulting from glorified curve fitting. But in high dimension, there is no such thing as interpolation. In high dimension, everything is extrapolation.”

Interpolation is an important method for approximating a discrete function: given the function's values at a finite number of points, it estimates the function's approximate values at other points.
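A minimal sketch of interpolation with `numpy.interp` (piecewise-linear), using hypothetical samples of f(x) = x². It also shows the limit LeCun points to: outside the known points, `numpy.interp` simply holds the end value and cannot extrapolate.

```python
import numpy as np

# Known values of a function at a finite number of points (here f(x) = x**2).
xs = np.array([0.0, 1.0, 2.0, 3.0])
ys = xs ** 2                         # [0, 1, 4, 9]

# Estimate the function between the known points by linear interpolation.
query = np.array([0.5, 1.5, 2.5])
estimates = np.interp(query, xs, ys)
print(estimates)                     # [0.5 2.5 6.5]; true values are 0.25, 2.25, 6.25

# Outside the data range np.interp returns the end value: 9.0, not the true 16.0.
print(np.interp(4.0, xs, ys))
```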

From a biological perspective, humans interpret images of the world layer by layer, from low-level features such as edges and contours to high-level features such as objects and scenes. The composition of functions in neural networks mirrors this: each composition learns increasingly complex features of the image. The most common neural network architecture for images is the CNN (Convolutional Neural Network), which learns these features hierarchically; a fully connected network then classifies the resulting image features into categories.
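To illustrate the lowest level of that hierarchy, the sketch below slides a hand-written vertical-edge kernel over a toy image, which is the core operation of a convolutional layer. The image, the kernel, and the helper `conv2d_valid` are hypothetical; a real CNN learns its kernels from data and uses optimized library routines.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """Slide the kernel over the image with no padding and stride 1.
    Like most deep learning libraries, this computes cross-correlation
    (the kernel is not flipped)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# A 5x6 toy image: dark left half, bright right half (one vertical edge).
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# A hand-written vertical-edge detector.
kernel = np.array([[-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0],
                   [-1.0, 0.0, 1.0]])

edges = conv2d_valid(image, kernel)
print(edges)   # each row is [0, 3, 3, 0]: strong response only around the edge
```

Deeper layers apply the same operation to these feature maps, which is how edges can be combined into contours, parts, and eventually objects.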

For example, given a set of data points on a plane, we try to fit a curve that represents the function defining those points. The more complex the fitted function (in polynomial interpolation, for instance, the higher the polynomial degree), the better it fits the data, but the worse it generalizes to new data points.
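The trade-off can be sketched by fitting polynomials of increasing degree to a few noisy samples of a hypothetical target, sin(2πx). The target, noise level, sample sizes, and degrees are all illustrative assumptions. Degree 9 passes through all 10 training points, so its training error is essentially zero, while its error on held-out points is typically worse than that of a moderate degree.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

def true_fn(x):
    return np.sin(2 * np.pi * x)

# 10 noisy training points and 100 held-out points from the same function.
x_train = np.linspace(0, 1, 10)
y_train = true_fn(x_train) + rng.normal(scale=0.2, size=x_train.size)
x_test = np.linspace(0, 1, 100)
y_test = true_fn(x_test) + rng.normal(scale=0.2, size=x_test.size)

def mse(p, x, y):
    return np.mean((p(x) - y) ** 2)

train_err, test_err = {}, {}
for degree in (1, 3, 9):
    p = Polynomial.fit(x_train, y_train, deg=degree)
    train_err[degree] = mse(p, x_train, y_train)
    test_err[degree] = mse(p, x_test, y_test)
    print(f"degree {degree}: train MSE {train_err[degree]:.4f}, "
          f"test MSE {test_err[degree]:.4f}")
```

Training error can only go down as the degree grows, because each higher-degree family contains the lower ones; held-out error is what reveals overfitting.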

This is where the main challenge of deep learning comes in, commonly known as the overfitting problem: fitting the training data as closely as possible at the expense of generalization. Almost all deep learning architectures must deal with this problem in order to learn general functions that perform equally well on unseen data.

How does deep learning learn? The problem determines the neural network architecture

So how do we learn this complex function?

It all depends on the problem at hand, which dictates the neural network architecture. For image classification we typically use a CNN. For time-series prediction or text we use an RNN (Recurrent Neural Network) or a Transformer. For a dynamic environment (such as driving a car) we use reinforcement learning.

Beyond that, learning involves dealing with different challenges:

· Regularization (used to keep the trained model from overfitting) ensures that the model learns a general function rather than memorizing the training data.

· Choose a loss function suited to the problem at hand. Roughly speaking, the loss function measures the error between what we want (the true value) and what we currently have (the current prediction).

· Gradient descent is the algorithm used to converge toward the optimal function. Choosing the learning rate is challenging: when we are far from the optimum we want to move faster, and as we get close to it we want to move more slowly so that we converge to a minimum (ideally the global one) instead of overshooting it.

· Networks with many hidden layers have to deal with the vanishing gradient problem. Architectural changes such as skip connections and suitable nonlinear activation functions (for example ReLU) can help.
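The first three points above can be sketched together on a one-weight regression model: a mean-squared-error loss, an L2 penalty as the regularizer, and gradient descent with a learning rate that shrinks over time. The data, the penalty strength `lam`, and the 1/(1 + decay·t) schedule are illustrative assumptions, not recommendations from the article; deep learning libraries provide their own optimizers and schedulers. The last lines hint at the fourth point: the sigmoid's derivative is at most 0.25, so a gradient passing back through n sigmoid layers is scaled by at most 0.25**n.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data: y = 3x - 2 plus a little noise.
x = rng.uniform(-1, 1, size=50)
y = 3 * x - 2 + rng.normal(scale=0.1, size=50)

def loss(m, c, lam):
    """Mean squared error plus an L2 penalty on the weight m (regularization)."""
    return np.mean((m * x + c - y) ** 2) + lam * m ** 2

def gradients(m, c, lam):
    err = m * x + c - y
    dm = 2 * np.mean(err * x) + 2 * lam * m
    dc = 2 * np.mean(err)
    return dm, dc

def train(lam=0.01, lr0=0.5, decay=0.01, steps=500):
    m, c = 0.0, 0.0
    for t in range(steps):
        lr = lr0 / (1 + decay * t)   # large steps early, smaller steps later
        dm, dc = gradients(m, c, lam)
        m -= lr * dm
        c -= lr * dc
    return m, c

m, c = train()
print(m, c, loss(m, c, 0.01))        # m close to (slightly below) 3, c close to -2

# Vanishing gradients: upper bound on the gradient scale through n sigmoid layers.
for depth in (2, 10, 30):
    print(depth, 0.25 ** depth)
```

Increasing `lam` pulls m further toward zero, which is the regularizer trading some fit on the training data for a simpler function.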

Neural Architectures and Big Data: The Computational Challenges of Deep Learning

Now that we know deep learning is essentially the learning of a complex function, we can see the computational challenges it brings:

To learn a complex function we need a large amount of data; to process big data we need a fast computing environment; and therefore we need an infrastructure to support that environment.

Parallel processing on the CPU is often not enough to compute millions or billions of weights (also known as the parameters of a deep learning model). Learning these weights requires vector (or tensor) multiplication, and this is where GPUs come in handy, because they can perform parallel vector multiplication very fast. Depending on the deep learning architecture, the data size, and the task at hand, we sometimes need one GPU and sometimes several; data scientists make this decision based on the existing literature or by measuring performance on a single GPU.
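To see how quickly the weight count grows, the sketch below counts the weights and biases of fully connected networks and shows that a forward pass over a batch is a single matrix multiplication per layer, the operation GPUs parallelize well. The layer sizes and batch size are hypothetical, the helper `dense_param_count` is introduced only for illustration, and NumPy here runs on the CPU; GPU array libraries execute the same matrix product on the GPU.

```python
import numpy as np

def dense_param_count(layer_sizes):
    """Weights plus biases of a fully connected network with the given layer widths."""
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))

# Hypothetical MLP: 784 inputs (a 28x28 image) -> 512 hidden -> 10 classes.
print(dense_param_count([784, 512, 10]))       # 407050

# Just two wide layers already reach tens of millions of parameters.
print(dense_param_count([4096, 4096, 4096]))   # 33562624

# A forward pass for a whole batch is one matrix multiplication per layer.
batch = np.ones((256, 784))                    # 256 example inputs
W1 = np.zeros((784, 512))                      # placeholder weights
hidden = batch @ W1
print(hidden.shape)                            # (256, 512)
```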

With an appropriate neural network architecture (number of layers, number of neurons, nonlinear functions, etc.) and sufficiently large data, a deep learning network can learn any mapping from one vector space to another. This is what makes deep learning such a powerful tool for machine learning tasks.