This article was compiled by 36Kr's translation team "Translation Bureau" (translator: boxi) and is republished by ifanr with authorization.

Artificial intelligence has been around for more than 60 years, yet some of the largest technology companies in the United States (Amazon, Microsoft, Google, Facebook and others) are only beginning to tap its potential and work out how it will change our future. This article, the first in Fast Company's series "The New Rules for AI," looks at Amazon's machine vision service Rekognition. The original author is Mark Sullivan, and the original title is: Amazon's AI is democratizing the war on NSFW user-generated content

Now, Amazon's controversial Rekognition computer vision technology has a new use: removing indecent photos from food delivery sites.

Well, at least in one case. London-based food delivery service Deliveroo faces a tough content moderation challenge. When there is a problem with an order, Deliveroo customers often send in photos of the food along with their complaint. Some of them photobomb the food with indecent body parts, or arrange the food into obscene shapes. Really.

Not surprisingly, Deliveroo employees don't want to see this kind of thing. So the company uses Rekognition to identify indecent photos and blur or delete them before anyone sees them.

Deliveroo's problem is a bizarre example of an increasingly serious problem. Many internet companies depend on UGC (user-generated content) in one way or another, and in recent years we have seen more and more of the dark side of human nature revealed through it. As websites increasingly become hotbeds of toxic UGC such as fake news, violent content, counterfeit and shoddy goods, bullying, and hate speech, content moderation has become a top priority. If you are as deep-pocketed as Facebook, you can develop your own AI or hire a large number of moderators (or both) to deal with the mess. Smaller companies with fewer resources do not have that option. That is where Amazon's content moderation service comes in.

The service is part of Rekognition, the computer vision service of Amazon Web Services, which has itself been the subject of plenty of bad press, since Amazon appears willing to provide face recognition services to US Immigration and Customs Enforcement. The Rekognition website lists other surveillance-oriented use cases: for example, reading license plates from different camera angles, or tracking the paths of people captured on camera.

Amazon may be looking for more positive publicity for its computer vision service, and this is the first time it has talked publicly about using Rekognition to moderate obscene or violent images in UGC. Rekognition's content moderation service detects unsafe or offensive content in the images and videos users upload to a company's website.

This is a growing business. "The role of UGC is growing year by year, because we now share two or three pictures with friends and family on social media every day," said Swami Sivasubramanian, vice president of Amazon AI. Sivasubramanian said that some customers began asking Amazon for content moderation services in 2017.

Companies can pay for Rekognition so they don't have to hire people to check user-uploaded images. Like other AWS services, content moderation uses a pay-as-you-go model, priced by the number of images processed by Amazon Rekognition's neural network.

Unsurprisingly, the early users of the content moderation service include dating and matchmaking sites, which face the challenge of quickly approving the selfies users upload to their profiles. Amazon said the matchmaking sites Coffee Meets Bagel and Shaadi use Rekognition for this purpose, as does the Portuguese site Soul, which helps others create dating sites.

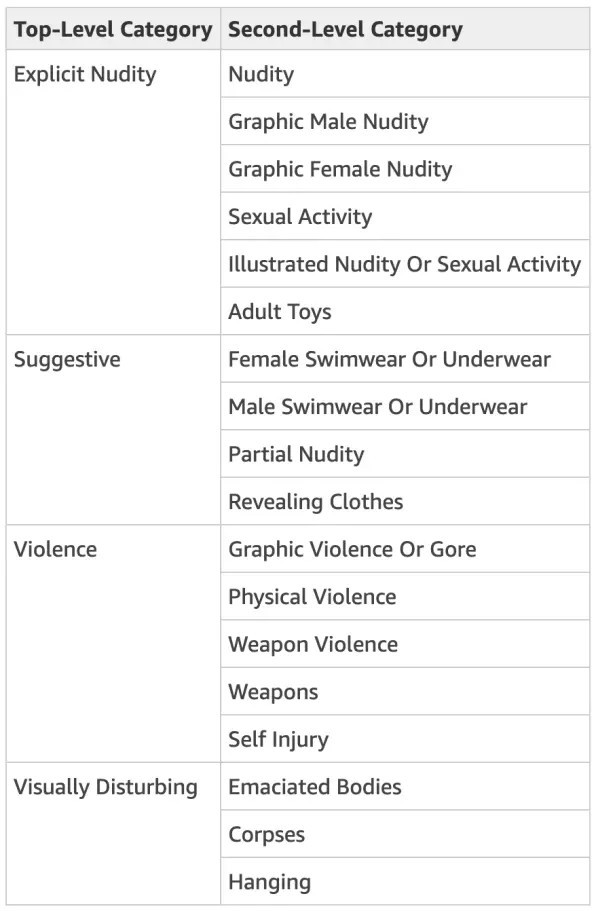

This AI is not just looking for nudity. Rekognition’s neural network has been trained to detect suspicious content, including guns or violent images or generally disturbing content. Below is a breakdown of the features on the Rekognition website:

▲ Image Source: Amazon

How It Works

Like all other AWS services, Rekognition's new content moderation feature runs in the cloud. A customer company can tell the service which types of problematic images it wants to detect. It then sends user-generated photos and videos (which in many cases are already stored on AWS) to the Rekognition service.
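As a rough sketch of this step, the Python below assembles the arguments for Rekognition's `DetectModerationLabels` operation on a photo already stored in Amazon S3, using the `Image`/`S3Object` and `MinConfidence` request fields. The sketch assumes the request carries only the image location and a confidence floor, so the customer's choice of which categories to act on is applied afterwards, on the client side, by the hypothetical helper `wanted_labels`. Bucket and key names are hypothetical.

```python
def build_moderation_request(bucket: str, key: str, min_confidence: float = 50.0) -> dict:
    """Return keyword arguments for DetectModerationLabels on an image stored in S3.

    min_confidence is a 0-100 percentage; 50.0 is an arbitrary example value.
    """
    if not 0.0 <= min_confidence <= 100.0:
        raise ValueError("min_confidence must be a percentage between 0 and 100")
    return {
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        "MinConfidence": min_confidence,
    }


def wanted_labels(response: dict, categories: set) -> list:
    """Hypothetical client-side filter: keep labels whose top-level category was requested.

    A label with an empty ParentName is treated as its own top-level category.
    """
    kept = []
    for label in response.get("ModerationLabels", []):
        top_level = label.get("ParentName") or label["Name"]
        if top_level in categories:
            kept.append(label)
    return kept
```

With boto3 installed and AWS credentials configured, the arguments would be passed as `boto3.client("rekognition").detect_moderation_labels(**build_moderation_request("example-uploads", "photos/123.jpg"))`; the sketch itself makes no network call.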

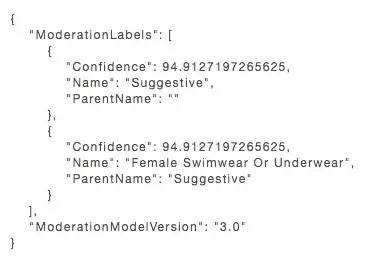

Amazon's deep neural network then processes the image, analyzes its content, and flags any potentially objectionable image types it finds. The network outputs metadata describing the image content, along with a percentage confidence score for each label it assigns.

This output is then sent to the customer's software, which decides how to handle the flagged image based on programmed business rules. The software may automatically delete the image, let it through, blur or obscure part or all of it, or send it to a human for review.
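To make the business-rules step concrete, here is a minimal sketch of customer-side logic that turns a moderation response into a single action: delete, blur, send to human review, or approve. It assumes the response is a dict with a `ModerationLabels` list whose entries carry `Name`, `ParentName` (empty for top-level categories), and `Confidence` as a percentage. The thresholds, the severity ordering, and the choice to blur rather than delete `Suggestive` content are hypothetical rules, not Amazon's.

```python
# Severity order assumed by this sketch: a more severe action overrides a milder one.
SEVERITY = {"approve": 0, "blur": 1, "human_review": 2, "delete": 3}


def decide_action(response: dict,
                  delete_at: float = 90.0,
                  review_at: float = 60.0,
                  blur_only: frozenset = frozenset({"Suggestive"})) -> str:
    """Map a moderation response to one business action (hypothetical rules)."""
    action = "approve"
    for label in response.get("ModerationLabels", []):
        top_level = label.get("ParentName") or label["Name"]
        confidence = float(label["Confidence"])
        if confidence >= delete_at:
            # Confident detection: blur milder categories, remove everything else.
            candidate = "blur" if top_level in blur_only else "delete"
        elif confidence >= review_at:
            # Uncertain detection: let a person decide.
            candidate = "human_review"
        else:
            continue  # too weak to act on
        if SEVERITY[candidate] > SEVERITY[action]:
            action = candidate
    return action


# Illustrative response shape; the label names and scores are made up.
sample = {
    "ModerationLabels": [
        {"Name": "Violence", "ParentName": "", "Confidence": 72.4},
        {"Name": "Weapons", "ParentName": "Violence", "Confidence": 72.4},
    ]
}
print(decide_action(sample))  # human_review
```

Sending mid-confidence cases to a person instead of deleting them is one way to limit the cost of false positives; a stricter site might simply lower `delete_at`.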

The deep neural network used for image processing has many layers. Each layer evaluates data points representing different aspects of the image, performs calculations on them, and passes the results on to the next layer. The network first processes general information, such as the basic shapes in the image and whether a person is present.

"Then it slowly refines and refines, and each step gets more and more specific," Sivasubramanian explains. Gradually, the neural network works out more and more precisely what is in the image.
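The layer-by-layer flow described here can be illustrated with a toy feedforward network in plain Python: each layer computes weighted sums of the previous layer's outputs and passes the results on, and a final logistic function turns the last output into a score between 0 and 1. This is only a sketch of the general idea with made-up weights; the article does not describe Rekognition's architecture, and real image models use convolutional layers with millions of learned parameters working on pixels rather than two hand-picked input features.

```python
import math


def dense(inputs, weights, biases):
    """One fully connected layer: each unit sums weighted inputs and adds a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]


def forward(features, layers):
    """Pass features through each (weights, biases) layer in turn.

    Hidden layers apply ReLU; the last layer's raw output is returned.
    """
    activations = list(features)
    for i, (weights, biases) in enumerate(layers):
        activations = dense(activations, weights, biases)
        if i < len(layers) - 1:
            activations = relu(activations)
    return activations


def unsafe_probability(features, layers):
    """Squash the single final output into a 0-1 score with the logistic function."""
    (logit,) = forward(features, layers)
    return 1.0 / (1.0 + math.exp(-logit))


# Toy weights for a 2-input, 3-hidden-unit, 1-output network (purely illustrative).
toy_layers = [
    ([[1.0, 0.0], [0.0, 2.0], [-1.0, -1.0]], [0.0, 0.0, 0.0]),
    ([[0.5, 0.5, 1.0]], [-1.0]),
]
print(unsafe_probability([1.0, 0.5], toy_layers))  # 0.5
```

Here the hidden layer produces `[1.0, 1.0, -1.5]`, ReLU turns it into `[1.0, 1.0, 0.0]`, and the output layer yields 0, which the logistic function maps to 0.5.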

Matt Wood, vice president of AWS AI, said his team trained its computer vision models on millions of images from proprietary and publicly available datasets. He said Amazon did not use any customer images in training.

Frame-by-frame processing

Some of the largest customers of Rekognition's content moderation feature do not use it to moderate UGC at all. According to Amazon, media companies with large digital video libraries need to know what is in every frame of their videos. Rekognition's neural network can process every second of video, describe it with metadata, and flag potentially harmful images.
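For video, Rekognition runs as an asynchronous job (started with `StartContentModeration`), and the `GetContentModeration` results list detections, each with a millisecond `Timestamp` and a nested `ModerationLabel`. The sketch below assumes that response shape and groups nearby detections of the same label into time segments, roughly what a media company might want when indexing a video library. The confidence and gap thresholds are hypothetical, and real results arrive in pages linked by `NextToken`, which this sketch does not handle.

```python
from collections import defaultdict


def flagged_segments(response, min_confidence=80.0, max_gap_ms=1000):
    """Group per-timestamp moderation labels into (start_ms, end_ms) segments per label name.

    Confident detections of the same label at most max_gap_ms apart are merged
    into one segment; weaker detections are ignored.
    """
    times = defaultdict(list)
    for item in response.get("ModerationLabels", []):
        label = item["ModerationLabel"]
        if label["Confidence"] >= min_confidence:
            times[label["Name"]].append(item["Timestamp"])

    segments = {}
    for name, stamps in times.items():
        stamps.sort()
        merged = [[stamps[0], stamps[0]]]
        for t in stamps[1:]:
            if t - merged[-1][1] <= max_gap_ms:
                merged[-1][1] = t  # extend the current segment
            else:
                merged.append([t, t])  # start a new segment
        segments[name] = [tuple(seg) for seg in merged]
    return segments
```

Merging close timestamps means an editor reviews one flagged stretch of footage instead of dozens of individual frames.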

"There is one thing machine learning is really good at, and that is analyzing a video or an image and then providing additional context," Wood said. "It might say, 'This is a video of a woman walking a puppy by a lake,' or 'There is a man wearing clothes in this video.'" Used this way, he said, the neural network can detect dangerous, toxic, or defamatory content with extremely high accuracy.

▲ Image source: Amazon

Even so, this branch of computer vision has not yet matured. Scientists are still looking for new ways to optimize neural network algorithms so they identify images more accurately and in more detail. "We have not yet reached the point of diminishing returns," Wood said.

Sivasubramanian said that just last month, the computer vision team reduced the false positive rate (incorrectly flagging an image as potentially unsafe or objectionable) by 68% and the false negative rate by 36%. "There is still room to improve the accuracy of these APIs," he said.
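The two error rates can be made concrete with a little arithmetic. The sketch below uses the standard definitions, false positive rate = FP / (FP + TN) and false negative rate = FN / (FN + TP), and assumes the reported 68% and 36% figures are relative reductions; the article does not say whether they are relative or in percentage points. The evaluation counts are invented for illustration.

```python
def false_positive_rate(fp: int, tn: int) -> float:
    """Share of acceptable images wrongly flagged as unsafe."""
    return fp / (fp + tn)


def false_negative_rate(fn: int, tp: int) -> float:
    """Share of genuinely unsafe images the model missed."""
    return fn / (fn + tp)


def after_relative_reduction(rate: float, reduction: float) -> float:
    """Rate left after cutting it by a fraction (0.68 means a 68% relative cut)."""
    return rate * (1.0 - reduction)


# Hypothetical evaluation counts, not Amazon's figures.
fpr = false_positive_rate(fp=50, tn=950)    # 0.05
fnr = false_negative_rate(fn=40, tp=960)    # 0.04
print(after_relative_reduction(fpr, 0.68))  # about 0.016
print(after_relative_reduction(fnr, 0.36))  # about 0.0256
```

Under that assumption, a hypothetical 5% false positive rate would fall to about 1.6%, and a 4% false negative rate to about 2.56%.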

Beyond accuracy, customers have been asking for more detailed image classification. According to the AWS website, the content moderation service currently classifies unsafe images at only two levels: a top-level category and a second-level category. So the system might place an image in a top-level category for nudity, with a second-level category that describes it more specifically. A third level of classification could, for example, identify the type of sexual activity shown.
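Because each second-level label names its parent, a client can rebuild the category hierarchy itself. This sketch assumes a `ModerationLabels` list whose entries carry `Name`, `ParentName` (empty for top-level categories), and `Confidence`. It follows parent links upward, so the same code would also handle a hypothetical third level if one were added with the same kind of parent links. The label names follow the style of Rekognition's documented taxonomy but are illustrative, and the current taxonomy may differ.

```python
def label_paths(response: dict) -> dict:
    """Map each label's full category path (top level first) to its confidence."""
    labels = response.get("ModerationLabels", [])
    parent_of = {label["Name"]: label.get("ParentName", "") for label in labels}
    paths = {}
    for label in labels:
        path = [label["Name"]]
        parent = parent_of[label["Name"]]
        while parent and parent not in path:  # stop at the root or on a cycle
            path.append(parent)
            parent = parent_of.get(parent, "")
        paths[tuple(reversed(path))] = label["Confidence"]
    return paths


example = {"ModerationLabels": [
    {"Name": "Explicit Nudity", "ParentName": "", "Confidence": 97.0},
    {"Name": "Nudity", "ParentName": "Explicit Nudity", "Confidence": 97.0},
]}
for path, confidence in label_paths(example).items():
    print(" > ".join(path), confidence)
# Explicit Nudity 97.0
# Explicit Nudity > Nudity 97.0
```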

"Right now, the machine's style is very literal. It will tell you, 'These things are in there,'" said Pietro Perona, a professor of computation and neural systems at the California Institute of Technology. "But scientists want to go beyond that. They also want the machine to be able to tell what people are thinking and what is happening. That is the direction the field is heading, rather than just listing what is in the image like a shopping list."

These nuances are critical to content moderation. Whether an image contains potentially objectionable content may depend on the intentions of the people in it.

Even the definition of "unsafe" or "offensive" is a moving target: it changes over time and varies from place to place. Context is everything, Perona explained, and violent images are a good example.

"Violence may be unacceptable in some circumstances, such as real-life violence in Syria, but acceptable in others, for example in a football match or in a Quentin Tarantino film," Perona said.
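Rekognition returns the same labels wherever an image is posted, so context of the kind Perona describes has to come from the customer. One crude way to approximate it is a per-context policy table, sketched below: the same confident `Violence` label is blocked in a general feed but tolerated on a sports or film site. The context names, categories, and threshold are hypothetical, and a table like this cannot tell real-life violence from a film scene within the same context; that is the kind of understanding Perona says researchers are still working toward.

```python
# Hypothetical per-context policy: top-level categories each setting tolerates.
POLICIES = {
    "general_feed": frozenset(),
    "sports_highlights": frozenset({"Violence"}),
    "film_review": frozenset({"Violence", "Visually Disturbing"}),
}


def blocked_labels(response: dict, context: str, min_confidence: float = 80.0) -> list:
    """Return names of confident labels that the given context does not tolerate."""
    tolerated = POLICIES.get(context, frozenset())  # unknown contexts tolerate nothing
    blocked = []
    for label in response.get("ModerationLabels", []):
        top_level = label.get("ParentName") or label["Name"]
        if label["Confidence"] >= min_confidence and top_level not in tolerated:
            blocked.append(label["Name"])
    return blocked
```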

As with many AWS services, Amazon does not just sell Rekognition's content moderation tool to others; it is also its own customer. The company said it uses the service to moderate the user-generated images and videos submitted with customer reviews on its own marketplace.