Now let’s analyze the algorithm. Algorithms are to artificial intelligence what a chef is to a delicious meal. Over the past 10 years of AI development, data and computing power have played an important supporting role, but it is undeniable that the performance breakthroughs achieved by deep learning algorithms, combined with their applications, are an important reason why AI technology had reached a milestone stage of development by 2020.

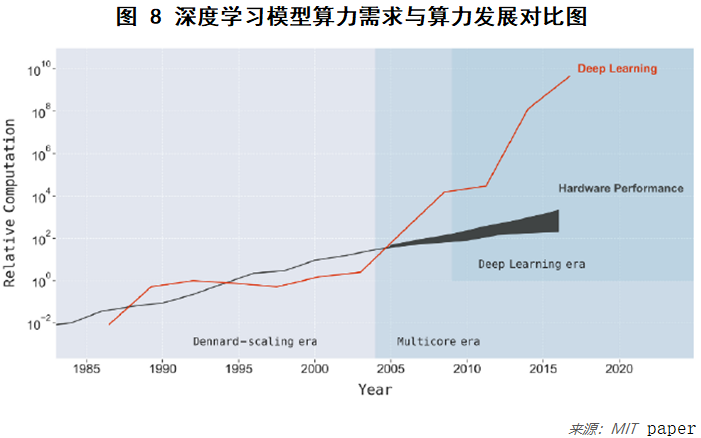

So, what is the future development trend of AI algorithms? This is one of the core questions discussed in academia and industry. A general consensus is that the progress of AI technology over the past 10 years has benefited from deep learning, but the computing power problems created by this path make it increasingly difficult to continue. Let’s look at a figure and a set of data:

1. According to OpenAI’s latest calculations, the computing power used to train a large AI model has increased 300,000-fold since 2012, an average annual increase of 11.5 times, whereas hardware computing power, following Moore’s Law, has grown by only 1.4 times per year on average. Improvements in algorithm efficiency, meanwhile, save about 1.7 times the computing power per year on average. This means that as we keep pursuing better algorithm performance, there will be an average computing power deficit of about 8.5 times every year, which is worrying. A practical example is the natural language pre-training model GPT-3 released this year: its training cost alone reached about 13 million US dollars. Whether this approach is sustainable is worth thinking about.

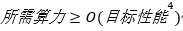

2. The latest MIT research shows that an over-parameterized AI model (that is, one with more parameters than training data samples) is subject to a theoretical bound relating computing power to performance.

According to this bound, the computing power required grows, in the ideal case, at least as fast as the 4th power of the performance requirement. An analysis of model performance on the ImageNet dataset from 2012 to the present shows that, in reality, the requirement fluctuates around the 9th power, which means that existing algorithm research and implementation methods leave a great deal of room for efficiency improvements.

3. Based on the data above, it is estimated that reaching a 1% error rate on the image classification task (ImageNet) would cost 100 million trillion (10 to the 20th power) US dollars, which is unaffordable.
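
To get a feel for the numbers in the three points above, here is a minimal Python sketch of the arithmetic. It only uses the growth factors and exponents quoted in the text. The function names are made up for illustration, and it assumes that "performance" means the inverse of the error rate, which the text does not state. It does not try to reproduce the 8.5-times deficit or the 10^20 dollar estimate, since the text does not say how those were derived.

```python
import math

# Figures quoted in the text above.
DEMAND_GROWTH_PER_YEAR = 11.5    # training compute used by large AI models
HARDWARE_GROWTH_PER_YEAR = 1.4   # hardware growth under Moore's Law
TOTAL_DEMAND_GROWTH = 300_000    # total growth in training compute since 2012
THEORY_EXPONENT = 4              # ideal-case exponent from the MIT bound
OBSERVED_EXPONENT = 9            # exponent observed on ImageNet


def doubling_time_months(annual_factor: float) -> float:
    """Months needed to double, given a constant yearly growth factor."""
    return 12 * math.log(2) / math.log(annual_factor)


def years_to_reach(total_factor: float, annual_factor: float) -> float:
    """Years of constant yearly growth needed to reach a total growth factor."""
    return math.log(total_factor) / math.log(annual_factor)


def compute_multiplier(error_reduction: float, exponent: float) -> float:
    """Extra compute needed to cut the error rate by `error_reduction` times.

    Assumption (not stated in the text): performance = 1 / error rate,
    and compute scales as performance ** exponent.
    """
    return error_reduction ** exponent


if __name__ == "__main__":
    print("Demand doubles every",
          round(doubling_time_months(DEMAND_GROWTH_PER_YEAR), 1), "months")
    print("Hardware doubles every",
          round(doubling_time_months(HARDWARE_GROWTH_PER_YEAR), 1), "months")
    print("Years of 11.5x growth behind a 300,000x increase:",
          round(years_to_reach(TOTAL_DEMAND_GROWTH, DEMAND_GROWTH_PER_YEAR), 1))
    print("Compute to halve the error, ideal case:",
          compute_multiplier(2, THEORY_EXPONENT))
    print("Compute to halve the error, as observed:",
          compute_multiplier(2, OBSERVED_EXPONENT))
```

Under these assumptions, halving the error rate costs 16 times the computing power in the ideal case but 512 times at the observed 9th-power level. That gap is why the text sees so much room for optimization.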

Combine the data and calculations described above