This article is from The New York Times, compiled by All Media Party (Quanmeipai), and is republished by ifanr with authorization.

Recently, MIT Ph.D. students found in two independent studies that although machines are good at identifying text generated by artificial intelligence, they struggle to tell whether that text is true or false. The reason is that the databases used to train machines to detect fake news are full of human bias, so the AI trained on them inevitably inherits stereotypes.

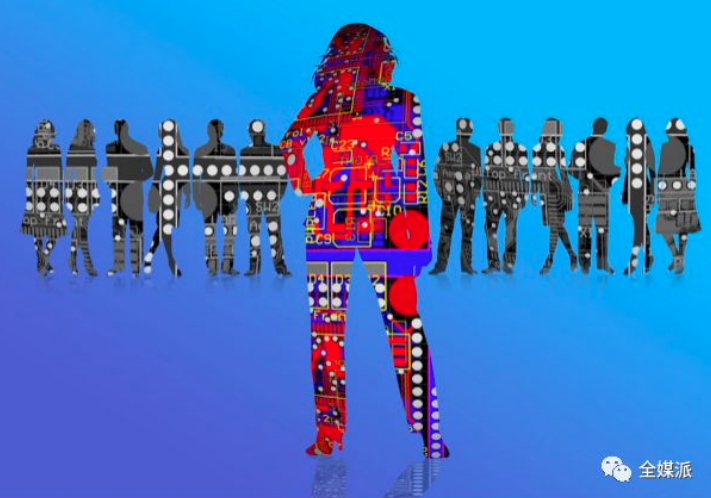

Human bias is a pervasive problem in the field of artificial intelligence. The ImageNetRoulette digital art project exposes this serious problem by using AI to describe images uploaded by users. In this issue, Quanmeipai (ID: quanmeipai) exclusively compiles The New York Times’s report on the ImageNetRoulette project, revealing the “invisible bias” behind artificial intelligence.

One morning, while Tabong Kima was scrolling through Twitter, he noticed a trending hashtag: #ImageNetRoulette.

Under this hashtag, users were uploading selfies to a website where artificial intelligence analyzed and described each face it saw. ImageNetRoulette labeled one man an “orphan” and another a “nonsmoker.” Someone wearing glasses might be labeled a “nerd, idiot, freak.”

▲A Twitter user uploaded his own photo, and the AI labeled him a “rape suspect” (upper left corner of the photo).

In the tweets Kima saw, these labels were sometimes accurate, sometimes strange, and sometimes outrageous, but everyone treated them as a joke, so he joined in. The result did not amuse the 24-year-old African American. He uploaded a photo of himself smiling, and the site labeled him a “wrongdoer” and an “offender.”

“Maybe I just have a bad sense of humor,” he tweeted, “but I don’t think this is funny.”

Note: As of press time, the website imagenet-roulette.paglen.com has gone offline and now redirects to www.excavating.ai. That page hosts an essay by the project’s founders, “Excavating AI: The Politics of Images in Machine Learning Training Sets.”

Behind Artificial Intelligence: Prejudice, Racism, and Misogyny

In fact, Kima’s reaction was exactly what the site was designed to provoke. ImageNetRoulette is a digital art project meant to expose the weird, unfounded, and offensive behavior that can creep into the artificial intelligence technologies rapidly changing our everyday lives. These include the facial recognition services widely used by internet companies, police departments, and other government agencies.

Facial recognition and other AI technologies learn their skills by analyzing vast amounts of data drawn from older websites and academic projects. That data inevitably contains subtle biases and other flaws that have gone unnoticed for years. That is why American artist Trevor Paglen and Microsoft researcher Kate Crawford launched the ImageNetRoulette project: they want to expose this problem more deeply.

“We want to show how prejudice, racism, and misogyny move from one system to the next,” Paglen said in a telephone interview. “The point is to let people see the work being done behind the scenes, and to see how we are being processed and categorized all the time.”

The site was unveiled this week as part of an exhibition at the Fondazione Prada museum in Milan. It focuses on ImageNet, a well-known large visual database. In 2007, researchers led by Fei-Fei Li began building ImageNet. The database went on to play an important role in the rise of “deep learning,” the family of techniques that enabled machines to recognize images, including human faces.

▲The “Training Humans” photography exhibition, unveiled at the Fondazione Prada museum in Milan, shows how artificial intelligence systems are trained to see and classify the world.

ImageNet gathers more than 14 million photos pulled from the internet, providing a way to train AI systems and to assess their accuracy. By analyzing many different kinds of images, such as flowers, dogs, and cars, these systems learn to identify them.

In discussions about artificial intelligence, one fact is rarely mentioned: ImageNet also contains photos of thousands of people, each sorted into a category. Some of the labels are straightforward, such as “cheerleaders,” “welders,” and “Boy Scouts.” Others are highly charged, such as “failure, loser, nonstarter, unsuccessful person” and “slattern, slut, slovenly woman, trollop.”

By building ImageNetRoulette around these labels, whether they seem harmless or not, Paglen and Crawford show how opinions, biases, and sometimes offensive points of view shape artificial intelligence.

The spread of prejudice

ImageNet’s labels were applied by thousands of anonymous people, most of them in the United States. The Stanford team hired them through Amazon Mechanical Turk, a crowdsourcing service. They earned a few cents for each photo they labeled and worked through hundreds of photos per hour. In the process, biases were baked into the database, although it is impossible to know whether the labelers themselves held those biases.

But they defined what “losers,” “sluts,” and “wrongdoers” should look like.

These labels originally came from another huge dataset, WordNet, a machine-readable conceptual dictionary built by researchers at Princeton University. The dictionary included these inflammatory labels, and the Stanford researchers behind ImageNet may not have realized the problem they were importing.

Artificial intelligence is usually trained on enormous datasets that even its creators do not fully understand. “Artificial intelligence always operates at a very large scale, and that has consequences,” said Liz O’Sullivan. She oversaw data labeling at the artificial intelligence startup Clarifai. She is now part of the Surveillance Technology Oversight Project (STOP), a civil rights and privacy group that aims to raise awareness of the problems with AI systems.

Many of the labels in ImageNet are extreme. But the same problem can arise with labels that seem harmless. After all, even the definitions of “man” and “woman” are open to question.

“When people label photos of women or girls, they may leave out nonbinary people (those who do not identify within the gender binary) or women with short hair,” O’Sullivan said. “Then you end up with an AI model that only includes women with long hair.”

In recent months, researchers have found that facial recognition services from companies such as Amazon, Microsoft, and IBM are biased against women and people of color. Through the ImageNetRoulette project, Paglen and Crawford hoped to draw attention to this issue, and they have succeeded. As the project spread on sites such as Twitter, ImageNetRoulette was recently generating more than 100,000 labels per hour.

“We didn’t expect it to take off the way it did,” Crawford and Paglen said. “It let us see what people really think about this issue and how they engage with it.”

After the Craze, Lingering Concerns

For some people, it was just a joke. But others, like Kima, understood what Crawford and Paglen were getting at. “They did a good job of exposing the problem, not that I wasn’t aware of it before,” Kima said.

However, Paglen and Crawford believe that the problem may be more serious than people think.

ImageNet is just one of many datasets that tech giants, startups, and academic labs reuse again and again to train various forms of artificial intelligence. Any flaws in these datasets may already have spread.

Today, many companies and researchers are trying to fix these flaws. In response to accusations of bias, Microsoft and IBM have updated their facial recognition services. In January of this year, Paglen and Crawford first discussed the strange labels in ImageNet. Around that time, Stanford researchers blocked downloads of all face images in the dataset. Now they say they will remove more face images.

In a statement to The New York Times, the Stanford research team said its long-term goal is “to address fairness, accountability, and transparency in datasets and algorithms.”

But for Paglen, a bigger concern looms: artificial intelligence learns from humans, and humans are biased creatures. “The way we label images is a product of our worldview,” he said. “Any classification system will reflect the values of whoever does the classifying.”