Deep learning needs to become as widespread as programming, rather than being left on the shelf.

Editor’s note: This article comes from the WeChat public account “Ran Caijing” (ID: rancaijing); author: Kim Yee.

The artificial intelligence products and technologies that had been given the cold shoulder over the past two years proved far from trivial during this epidemic.

Based on this, the market adjusted its forecast for the artificial intelligence market in 2020. According to the statistical forecast data of the China Academy of Information and Communications Technology, the global artificial intelligence market will reach 680 billion yuan in 2020, with a compound growth rate of 26.2%, and China’s artificial intelligence market will also reach 71 billion yuan in 2020, with a compound growth rate of 44.5%.

The sudden epidemic has accelerated the deployment of artificial intelligence in medicine, epidemic control and other fields, but it has also exposed the problem of lopsided development. On the whole, it is not difficult to see that although AI is developing rapidly, the Matthew effect is obvious. Internet giants and star AI companies tend to hold the advantage in resources or expertise, whereas for companies that want to use AI to upgrade themselves, the emergence of frameworks has greatly lowered the threshold.

But the only mainstream open source deep learning frameworks left are Google’s TensorFlow and Facebook’s PyTorch, and domestic developers are highly dependent on foreign open source frameworks.

Many domestic companies are aware of this problem and have come up with home-grown solutions. Baidu has PaddlePaddle. Huawei is about to open source MindSpore. Yesterday (March 25), the domestic computer vision company Megvii announced that it was open sourcing Tianyuan (MegEngine), the deep learning framework underlying its AI productivity platform Brain++.

According to media reports, Megvii recently received approval for an IPO in Hong Kong. Asked about this, Megvii told Ran Caijing that it was “in (steady) progress.” As the first company native to the artificial intelligence industry to join the open source framework camp, how will Megvii tell this story?

01 Frameworks decentralize AI

“Although Megvii can offer hundreds of algorithms, the unlimited variety of scenarios creates unlimited market demand for algorithms. Megvii alone cannot do that many things, so there needs to be a good AI infrastructure to help companies like ours, and to help more people create more algorithms,” Tang Wenbin, Megvii’s co-founder and CTO, said at the press conference.

In fact, artificial intelligence had begun to take shape even before 1956, but what really brought it to public attention was the development of deep learning around 2012. The emergence of deep learning led the industry into an “inflection point period.”

First, let’s sort out the relationship between the two.

The goal of artificial intelligence is to make a computer into a machine that can think like a human. Machine learning is a branch of artificial intelligence, and one of its fastest growing: it solves problems by having computers simulate or implement human learning behavior. Deep learning is one method of machine learning. Its arrival marked a farewell to manual feature extraction: borrowing from the way the human brain processes visual information layer by layer, it builds high-level abstractions of data.

In theory, as long as the computing power of the computer is sufficient and the amount of sample data is large enough, the number of layers of the neural network can be continuously increased and the structure of the neural network can be changed, and the effect of the deep learning model will be significantly improved.

In other words, the development of big data drove the rise of deep learning, and deep learning in turn maximizes the value of big data; the two complement each other. Especially in the areas where the AI industry was first deployed, such as speech recognition and image recognition, the data fed back during commercial deployment has pushed deep learning to drive step-by-step upgrades in computing power and frameworks.

Processing large amounts of data requires sufficient computing power. In the past decade, the improvement of computer hardware performance, the development of cloud computing, and distributed computing systems have provided sufficient computing power for deep learning.

In the field of deep learning, there are five giants with their own deep learning frameworks: Google has TensorFlow, Facebook has PyTorch, Baidu has PaddlePaddle, Microsoft has CNTK, and Amazon’s AWS has MXNet.

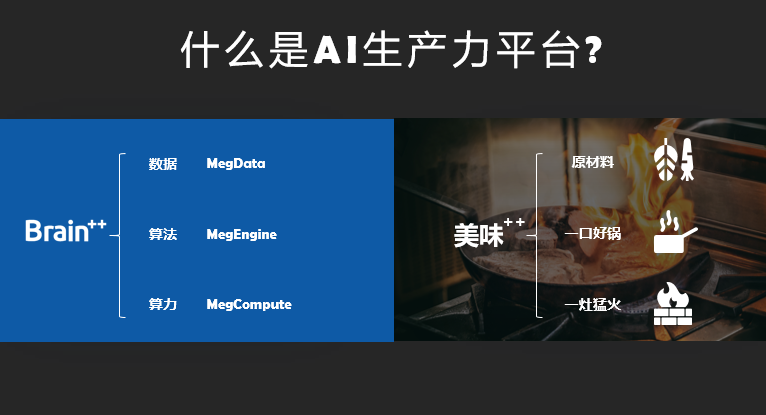

The relationship between data, algorithms, and deep learning frameworks can be put simply: building an algorithm is like cooking. Data is the assortment of ingredients, which must be washed, sorted and managed before cooking (making the algorithm) can begin. Training the algorithm is like the cooking process itself, and it needs a good pot (the deep learning framework) to hold it. Powerful computing power is the fierce flame under the algorithm, and how well the dish turns out depends to some extent on control of the heat. The best ingredients, plus a good pot and a roaring fire, make a good dish.

Similarly, standardized, process-oriented data management, an efficient deep learning framework, and powerful computing power together make it possible to develop useful algorithms.

The advent of deep learning frameworks has greatly lowered the barrier to entry for developers. A framework is a tool that helps them do deep learning; put simply, it is a library for programming. Developers do not need to write a whole set of machine learning algorithms from scratch. They can take the existing models in the framework and assemble them as needed, with the manner of assembly left up to the developer, or they can add layers on top of existing models to train a model of their own.
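To make the idea of “assembling” concrete, here is a minimal sketch in plain Python. The classes `Linear`, `ReLU`, and `Sequential` are hypothetical stand-ins for the ready-made components a real framework would supply; they are not the API of TensorFlow, PyTorch, MegEngine, or any other library, and the weights are made-up example values. The sketch shows only assembly and the addition of a layer, not training.

```python
# Toy illustration only: these classes are hypothetical stand-ins for the
# prebuilt components a framework provides, not any real framework's API.
from typing import List

Vector = List[float]


class Linear:
    """Fully connected layer: y = W x + b, with fixed example weights."""

    def __init__(self, weights: List[Vector], bias: Vector) -> None:
        self.weights = weights
        self.bias = bias

    def __call__(self, x: Vector) -> Vector:
        return [
            sum(w * xi for w, xi in zip(row, x)) + b
            for row, b in zip(self.weights, self.bias)
        ]


class ReLU:
    """Element-wise max(0, x)."""

    def __call__(self, x: Vector) -> Vector:
        return [max(0.0, xi) for xi in x]


class Sequential:
    """Chains layers so that the output of one feeds the next."""

    def __init__(self, *layers) -> None:
        self.layers = list(layers)

    def add(self, layer) -> None:
        self.layers.append(layer)

    def __call__(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer(x)
        return x


# Assemble an existing "model" from ready-made parts...
model = Sequential(Linear([[1.0, -1.0], [0.5, 0.5]], [0.0, -2.0]), ReLU())
hidden = model([2.0, 1.0])

# ...then extend it with one more layer instead of rewriting anything.
model.add(Linear([[2.0, 1.0]], [0.5]))
output = model([2.0, 1.0])
```

The developer decides which parts to chain and in what order; the parts themselves come from the library.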

For algorithm producers, a framework makes it possible to produce algorithms at scale while keeping data costs and computing power consumption (cloud service costs) as low as possible. Easy-to-use development tools let developers say goodbye to the manual era of algorithm training. It is like the combine harvester for farmers: where ten people with sickles and hoes could once harvest only a single plot, an automated, modern harvester lets one person bring in the harvest from ten plots.

In fact, the cost of cloud services is relatively controllable. As for the datasets needed to optimize AI algorithms, the more data there is, the higher the quality of the trained algorithm. And as more and more scenarios use high-quality algorithms, the commercial value they generate grows and the cost of acquiring datasets falls. This means that for companies that want to use artificial intelligence to upgrade themselves, the emergence of frameworks can stop artificial intelligence from being a “cost center.”

Looking back over the past three or four years, artificial intelligence has been accelerating business innovation across industries and has gradually penetrated retail, education, communications, finance, public utilities, healthcare, smart cities and other fields. However, it is not difficult to see that although AI is developing rapidly, the Matthew effect is obvious. Internet giants and star AI companies tend to hold the advantage in resources or expertise, whereas traditional industries face many challenges in deploying artificial intelligence, and high R&D investment and complex algorithm engineering have become a burden.

While top AI scientists strive at the frontier of algorithm and model research, a large number of vendors are also working hard to promote standardized machine learning algorithms, which objectively lowers the cost of developing AI algorithms and lets companies in every industry focus on their upper-level business logic. But for a traditional enterprise, it is simply not realistic to set up an AI research institute and develop algorithms from zero to one.

02 What can AI change? The open source framework is offering more options

Which industries will AI change? The answer may well be every industry.

Although artificial intelligence began a new round of explosive growth with the introduction of deep learning in 2012, and domestic investment in artificial intelligence began to heat up in 2014, most investment institutions and the general public did not yet understand artificial intelligence at that time.

Because of a Go match in 2016 (AlphaGo defeated Lee Sedol), artificial intelligence formally entered the public eye and became one of the most talked-about public events in the world at the time. Investors began studying AI and went looking for AI teams with money in hand. Big names in the domestic Internet industry preached AI at all kinds of conferences. Algorithm competitions grew popular, university courses on artificial intelligence and data mining filled up with students, and large numbers of “algorithm engineers” poured into the industry.

According to a report released by the Wuzhen Think Tank, from 2014 to 2016 the annual number of investments in China’s artificial intelligence industry rose from nearly 100 to nearly 300, and the scale of financing rose from about 200 million US dollars to 1.6 billion US dollars, growing to nearly 3 times and 8 times their earlier levels respectively.

These numbers began to spark market concerns about the industry “overheating.” Then the capital winter set in in 2018, and investment, including in artificial intelligence, cooled down. Once the temperature dropped, outsiders began to focus on the profitability of AI companies.

By 2019, after artificial intelligence had sustained nineteen years of soaring financing, funding plummeted. According to a statistical analysis by Cheetah Global Think Tank, the amount of financing raised by AI companies had grown continuously for 18 years since 2000, with a major surge from 2013 to 2018 in which both the amount and the number of financings soared, growing at a rate of 50%. But 2019 became a watershed: compared with the previous year, the total amount of financing fell by 34.8% (from 148.453 billion to 96.727 billion), and the number of financings fell by about 40% (from 737 to 431).
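The quoted 34.8% decline only works out if both financing totals are read in the same unit. The short check below assumes the totals are 148.453 billion and 96.727 billion (that is, that the larger figure was originally stated in units of 100 million); that reading is an assumption made here, and the function name `percent_drop` is purely illustrative.

```python
def percent_drop(before: float, after: float) -> float:
    """Percentage decrease going from `before` to `after`."""
    return (before - after) / before * 100.0


# Assumption: both totals are expressed in billions.
total_drop = percent_drop(148.453, 96.727)

# Number of financings, as quoted in the text.
deal_drop = percent_drop(737, 431)

print(f"total financing: -{total_drop:.1f}%")
print(f"number of financings: -{deal_drop:.1f}%")
```

Under that assumption the total falls by about 34.8%, matching the quoted figure, while the count of financings falls by about 41.5%, which the text gives in round terms as 40%.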


That year has been recognized as the cold winter of artificial intelligence. The AI capital market began to calm down, and the industry entered a stage of weeding out the fakes: opportunists with no core technology who hoped to strike gold while the trend was hot have retreated. But looking back over these years, the core reason AI was questioned was simply that it fell short of expectations. Recall the last technological revolution: it was not the steam engine itself that changed production and daily life, but steam-powered spinning machines, trains, ships and so on. At present, deep learning has far too few directions for real-world deployment, and the so-called industry outlook is hard to confirm.

Look at the issue from another angle: decades ago, the major computer manufacturers actively built their own ecosystems and ushered in a sweeping information revolution. Now we are in a wave of artificial intelligence in which deep learning is the main force. Since applications of neural networks have largely been deployed in areas such as face recognition, the remaining work should focus on areas where commercial use is still far from ideal. The talent this requires is no longer algorithm scientists or deep learning engineers, but software engineers, hardware engineers, and mechanical engineers within each industry who understand deep learning.

This requires deep learning to become as widespread as programming, rather than being left on the shelf. In fact, the basic platforms and tools, that is, the frameworks, have already moved from Caffe, Torch, and Theano, which came out of academia, to today’s TensorFlow, led by industry technology giants.

From a global perspective, the landscape of artificial intelligence is still unclear, but the players fall mainly into three camps.

The first is the system application camp, typified by Google and Facebook, which have developed system-level AI frameworks, such as Google’s TensorFlow and Facebook’s PyTorch, and also put them to use at scale in applications. The second is the chip camp, whose main players are Intel and Nvidia and which mainly provides computing power support. The third is the technology application camp, to which most so-called artificial intelligence companies currently belong.

As frameworks map ever more closely onto production and industrial applications, the industry is answering the question “What can AI do for us?”

03 Why Megvii joined the battle of open source deep learning frameworks

Competition over deep learning frameworks has become the commanding heights of the contest in artificial intelligence. This is why there are always vendors willing to invest heavily in designing new frameworks that try to fix the shortcomings of existing ones and, more importantly, to contend for the standards of deep learning, so that they can connect to chips below, support all kinds of applications above, and expand their territory.

But the current mainstream open source deep learning frameworks are Google’s TensorFlow and Facebook’s PyTorch, which between them hold most of the market share. The former was open sourced in 2015 and went on to entrench itself in industry with its stable performance and security. By open sourcing its framework, Google hopes to tie more companies and users to its own underlying products, namely its chips and the cloud services built on those chips, extending from the framework down to the underlying infrastructure. The later entrant PyTorch broke into academia through its simplicity and flexibility, and has gradually been converging with the former.

Xu Chenggang, a professor of economics and director of the Center for Artificial Intelligence and Institutional Research at the Cheung Kong Graduate School of Business, concluded in a talk in early 2019 that over the previous three years the total number of AI-focused open source software packages in China had risen rapidly and surpassed that of the United States in the fall of 2017. However, almost 93% of Chinese researchers use open source AI software packages developed by institutions in the United States, and the package most used by AI researchers in both China and the United States is TensorFlow, developed by Google.

Open source software packages are an indicator of the level of AI research and development. In artificial intelligence, chips represent computing power and frameworks represent algorithms; algorithms and computing power are the two cornerstones. If domestic developers rely heavily on foreign open source frameworks, it is equivalent to being caught in a “chokehold.”

Something similar has already happened with chips. In 2015, the upgrade originally planned for the supercomputer “Tianhe-2,” led by the National University of Defense Technology, had to be suspended because Intel cut off the supply of supercomputer chips. The upgrade was not completed until 2018, with the help of China’s self-developed Matrix-2000 accelerator card.

Many domestic companies are aware of this problem and have come up with home-grown solutions. Baidu open sourced PaddlePaddle in the second half of 2016. Huawei said in August last year that it planned to open source MindSpore in Q1 of 2020. On March 25, Megvii announced that it was open sourcing Tianyuan (MegEngine), the core deep learning framework of its AI productivity platform Brain++, and that it was also opening the Brain++ product to enterprise users.

Since these frameworks are all open source, do domestic technology companies still need to reinvent them on their own?

The answer is yes. Artificial intelligence is not an ivory-tower theory; it must face real scenarios and be applied in actual business. Each tech company that open sources a deep learning framework has its own distinctive business scenarios and problems. Baidu’s PaddlePaddle has built up a great deal in natural language processing. Huawei’s MindSpore emphasizes hardware-software coordination and deployment on mobile devices, while Megvii’s Tianyuan (MegEngine) emphasizes the integration of training and inference and the unification of dynamic and static graphs, and is compatible with PyTorch.
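For readers unfamiliar with the “dynamic and static” distinction, the toy sketch below shows the general idea as it is commonly understood: in dynamic mode each operation runs immediately, while in static mode the same model code is first recorded into a graph that can be replayed later. Everything here (`Eager`, `Tracer`, `trace`, `run_graph`) is hypothetical and written for illustration; it is not MegEngine’s API or that of any other framework.

```python
# Toy sketch: "dynamic" runs each operation at once; "static" records the
# operations into a graph first and replays the graph afterwards.
# All names are hypothetical; this is not any real framework's API.
from typing import List, Tuple

Op = Tuple[str, float]  # (operation name, constant operand)

OPS = {
    "add": lambda x, c: x + c,
    "mul": lambda x, c: x * c,
}


class Eager:
    """Dynamic mode: every call computes a value right away."""

    def __init__(self, value: float) -> None:
        self.value = value

    def apply(self, name: str, c: float) -> "Eager":
        return Eager(OPS[name](self.value, c))


class Tracer:
    """Static mode: calls only record what should happen."""

    def __init__(self, graph: List[Op]) -> None:
        self.graph = graph

    def apply(self, name: str, c: float) -> "Tracer":
        self.graph.append((name, c))
        return self


def model(x):
    """The same model code, written once and used in both modes."""
    return x.apply("mul", 3.0).apply("add", 1.0)


def trace(fn) -> List[Op]:
    """Run fn on a Tracer to capture its operations as a graph."""
    graph: List[Op] = []
    fn(Tracer(graph))
    return graph


def run_graph(graph: List[Op], value: float) -> float:
    """Replay a recorded graph on a concrete input."""
    for name, c in graph:
        value = OPS[name](value, c)
    return value


dynamic_result = model(Eager(2.0)).value  # executes step by step
graph = trace(model)                      # recorded list of operations
static_result = run_graph(graph, 2.0)     # replays the recorded graph
```

Both paths give the same answer for the same input; the practical difference is that a recorded graph can be inspected, optimized, or shipped for inference without the original Python code.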

Open source
